At Microsoft Ignite this past September, Microsoft made some announcements around Azure. One of these announcements was the general availability of multiple VIPs on internal load balancers. Why is this so important to a SQL Server DBA? Well, up until now if you want to deploy highly available SQL Server in Azure you were limited to a single SQL Server FCI per cluster or a single Availability Group listener.

This limitation forced you to deploy a new cluster for each instance of SQL Server you wanted to protect in a Failover Cluster. It also forced you to group all of your databases into a single Availability Group if you wanted automatic failover and client redirection in your AlwaysOn AG configuration.

Those restrictions have now been lifted with these new ILB features. In this post I am going to walk you through the process of deploying a SQL Server FCI in Azure that contains two SQL Server instances. In a future post I will walk you through the same process for SQL Server AlwaysOn AG.

Let’s start with a basic, single instance SQL Server FCI in Azure as I describe in my post Deploying Microsoft SQL Server 2014 Failover Clusters in Azure Resource Manager .

That post describes the process of creating the cluster, using DataKeeper to create the replicated volume resources used in the cluster, creating the Internal Load Balancer (ILB) and then fixing the SQL Server Cluster IP Resource to work with the ILB. If you want to skip that process and jumpstart your configuration you can always use the Azure Deployment Template that creates a 2-Node SQL Server FCI using SIOS DataKeeper

Assuming you now have a basic two node SQL Server FCI, the steps to add a 2nd named instance are as follows:

- Create another DataKeeper Volume Resource on another volume that is not currently being used. You may need to add additional disks to your Azure instance if you have no available volumes. As part of this volume creation process the new DataKeeper Volume resource will be registered in Available Storage in the cluster. Refer to the article referenced earlier for the details.

- Install a named instance of SQL Server on the first node, specifying the DataKeeper Volume that we just created as the storage location.

- “Add a node” to the cluster on the second node.

- Lock down the port number of this new named instance to a port that is not in use. In my example I use port 1440.

Next we have to adjust the ILB to redirect traffic to this second instance. Here are the steps you need to follow:

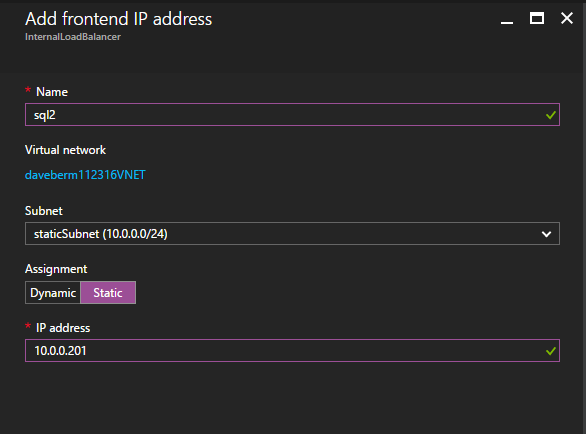

Add a frontend IP address that is identical to the SQL cluster IP address you used for the second instance of SQL Server as shown below.

Next, we will need to add another probe since the instances could be running on different servers. As shown below, I added a probe that probes port 59998 (instead of the usual 59999). We will need to make sure the new rules reference this proble. We will also need to remember that port number since we will need to update IP address associated with this instance during the last step of this process.

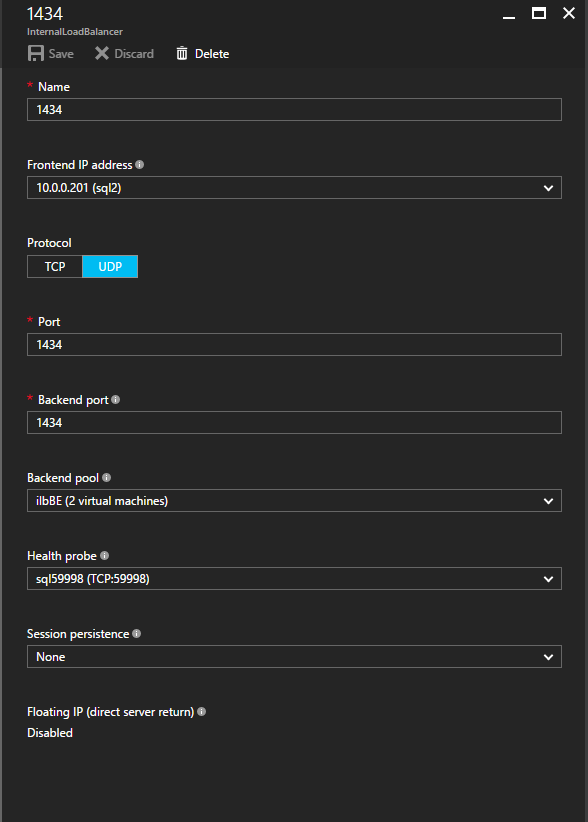

Now we need to add two new rules to the ILB to direct traffic destined for this 2nd instance of SQL. Of course we need to add a rule to redirect TCP port 1440 (the port I used for the named instance of SQL), but because we are now using named instances we will also need to have a port to support the SQL Server Browser Service, UDP Port 1434.

In the picture below depicting the rule for the SQL Server Browser Service, take note that the Front End IP Address is referencing the new FrontendIP address (10.0.0.201), UDP port 1434 for both the Port and Backend Port. In the pool you will need to specify the two servers in the cluster, and finally make sure you choose the new Health Probe we just created.

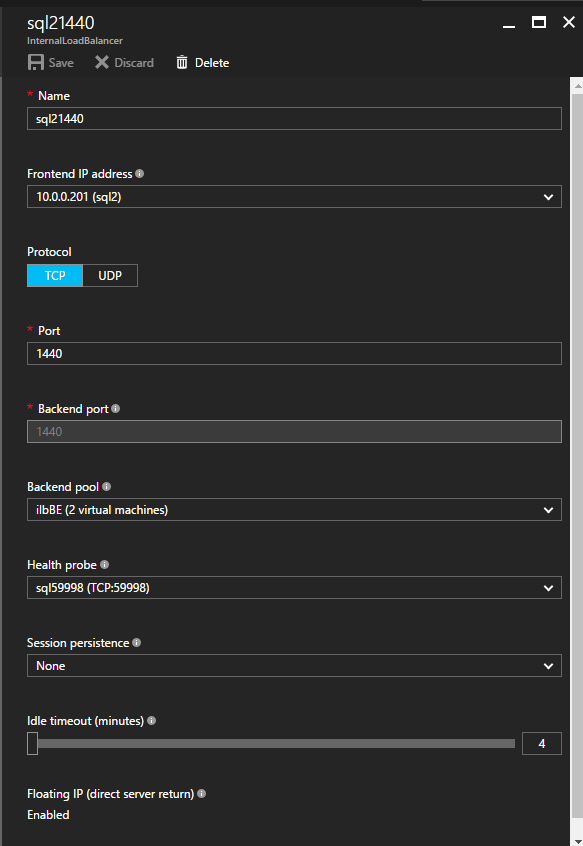

We will now add a rule for TCP/1440. As show in the picture below, add a new rule for port TCP 1440, or whatever port locked down for the named instance of SQL Server. Again, be sure to choose the new FrontEnd IP Address and the new Health Probe (59998). Also, make sure the Floating IP (direct server return) is enabled.

Now that the load balancer is configured, the final step is to run the PowerShell script to update the new Cluster IP address associated with this 2nd instance of SQL Server. This PowerShell script only needs to be run on one of the cluster nodes.

# Define variables

$ClusterNetworkName = “”

# the cluster network name (Use Get-ClusterNetwork on Windows Server 2012 of higher to find the name)

$IPResourceName = “”

# the IP Address resource name of the second instance of SQL Server

$ILBIP = “”

# the IP Address of the second instance of SQL, which should be the same as the new Frontend IP address as well

Import-Module FailoverClusters

# If you are using Windows Server 2012 or higher:

Get-ClusterResource $IPResourceName | Set-ClusterParameter -Multiple @{Address=$ILBIP;ProbePort=59998;SubnetMask="255.255.255.255";Network=$ClusterNetworkName;EnableDhcp=0}

# If you are using Windows Server 2008 R2 use this:

#cluster res $IPResourceName /priv enabledhcp=0 address=$ILBIP probeport=59998 subnetmask=255.255.255.255

You now have a fully functional multi-instance SQL Server FCI in Azure. Let me know if you have any questions.