So you just downloaded the bits for Windows Server 8 Beta and you are anxious to try out all the great new features, including Windows Storage Spaces, Continuously Available File Servers and Hyper-V Availability. Many of those new features require you to become familiar with Windows Server Failover Clustering. In addition, features like Storage Spaces require access to additional storage to simulate JBODs. Windows iSCSI Target Software is a great way to provide storage for Failover Clustering and Storage Spaces in a lab environment so you can play around with these new features.

This step-by-step article assumes you have three Windows Server 8 servers running in a domain environment. My lab environment consists of the following:

Hardware

My three servers are all virtual machines running on VMware Workstation 8 on top of my Windows 7 laptop with 16 GB of RAM. See my article on how to install Windows Server 8 on VMware Workstation 8.

Server Names and Roles

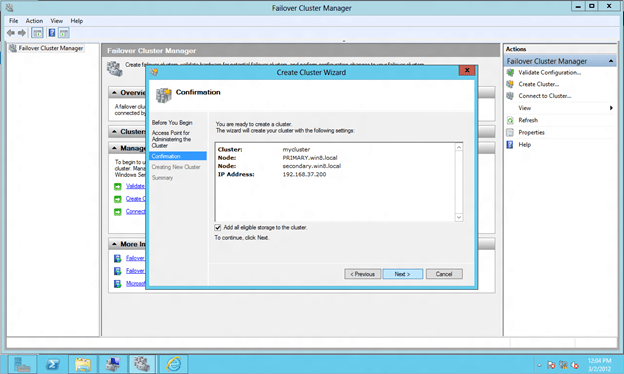

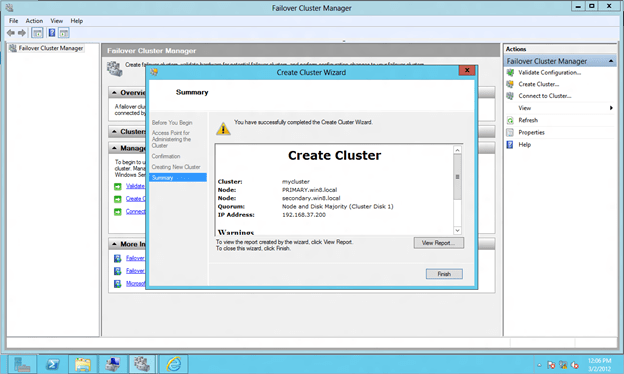

PRIMARY.win8.local – my cluster node 1

SECONDARY.win8.local – my cluster node 2

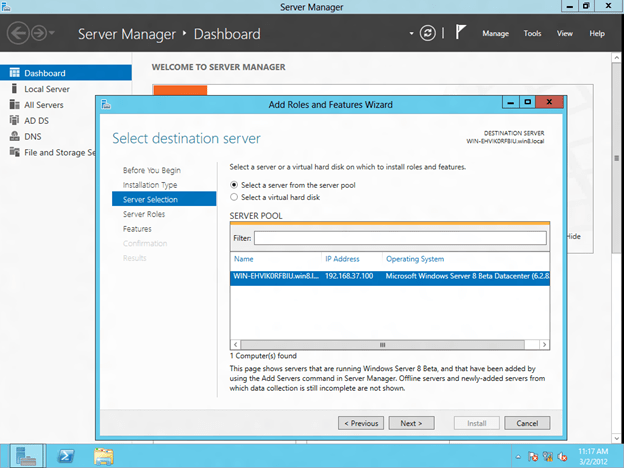

WIN-EHVIK0RFBIU.win8.local – my domain controller (guess who forgot to rename his DC before promoting it to a domain controller 🙂)

Network

192.168.37.X/24 – my public network also used to carry iSCSI traffic

10.X.X.X/8 – a private network defined only between PRIMARY and SECONDARY for cluster communication
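The key point of this layout is that the public/iSCSI network and the private cluster network must not overlap, and each cluster node should have one address on each. Here is a minimal Python sketch of that sanity check using the standard `ipaddress` module. It assumes the "X" placeholders above mean the whole 192.168.37.0/24 and 10.0.0.0/8 blocks; the helper names and any node addresses you pass in are hypothetical.

```python
import ipaddress

# Lab networks from the list above; the "X" placeholders are read as full CIDR blocks.
PUBLIC = ipaddress.ip_network("192.168.37.0/24")  # public network, also carries iSCSI
PRIVATE = ipaddress.ip_network("10.0.0.0/8")      # cluster-only link, PRIMARY <-> SECONDARY


def classify(address):
    """Return which lab network an address belongs to (hypothetical helper)."""
    ip = ipaddress.ip_address(address)
    if ip in PUBLIC:
        return "public/iSCSI"
    if ip in PRIVATE:
        return "private/cluster"
    return "unknown"


def check_node(addresses):
    """True if a node has exactly one address on each lab network."""
    roles = [classify(a) for a in addresses]
    return roles.count("public/iSCSI") == 1 and roles.count("private/cluster") == 1
```

For example, `PUBLIC.overlaps(PRIVATE)` should be `False`, and `check_node(["192.168.37.101", "10.0.0.1"])` (made-up addresses for PRIMARY) passes, while a node with two public addresses and no private one does not.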

This article walks you step by step through the following:

- Install the iSCSI Target Role on your Domain Controller

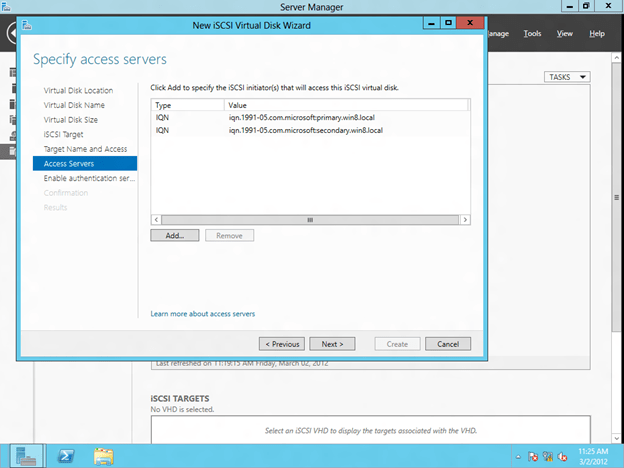

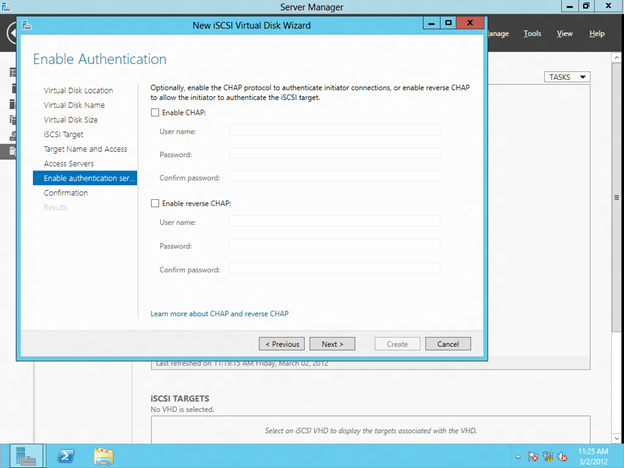

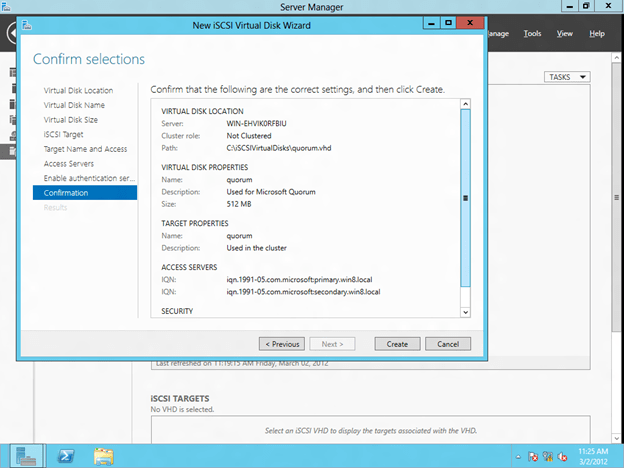

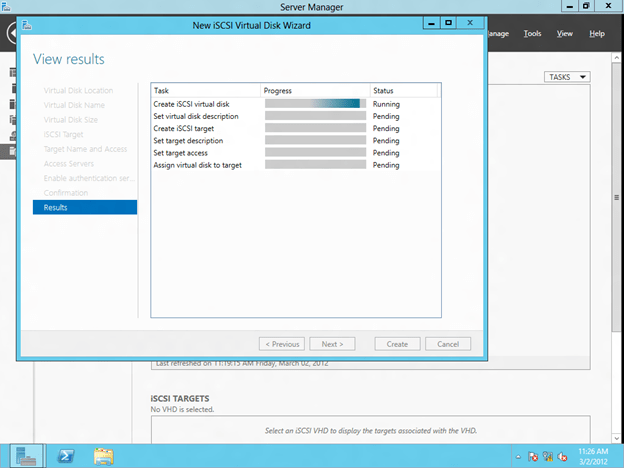

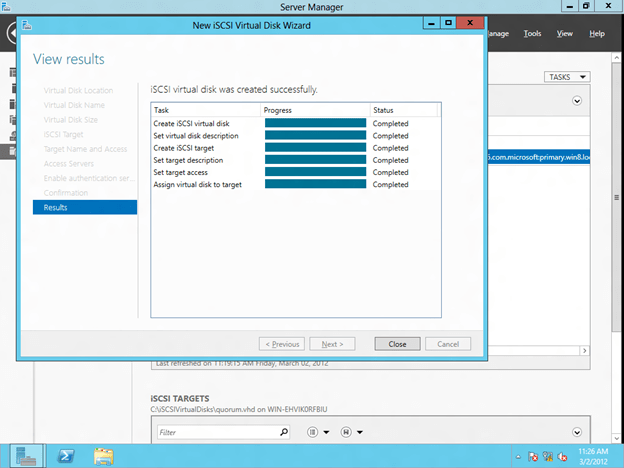

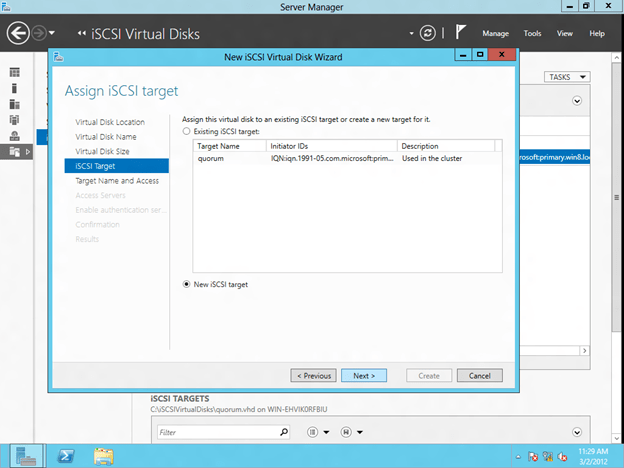

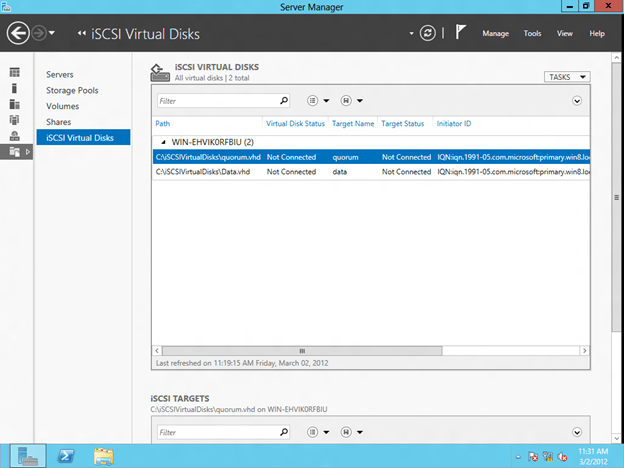

- Configure the iSCSI Target

- Connect to the iSCSI Target using the iSCSI Initiator

- Format the iSCSI Target

- Connect to the shared iSCSI Target from the SECONDARY Server

- Configure Windows Server 8 Failover Clustering

The article consist mostly of screen shots, but I also add notes where needed.

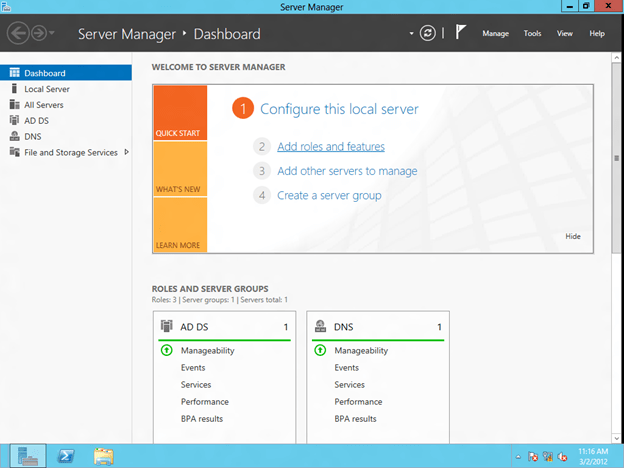

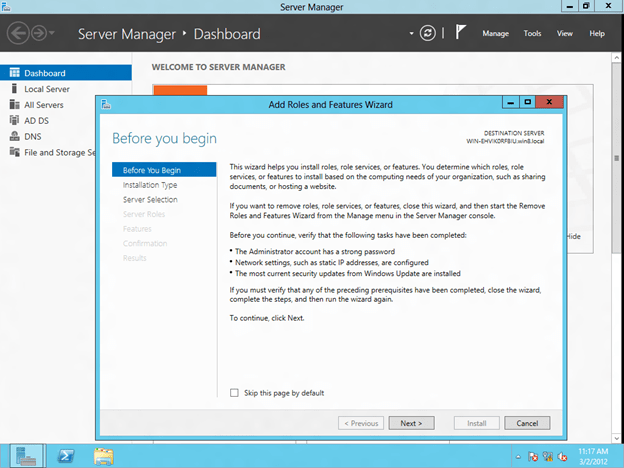

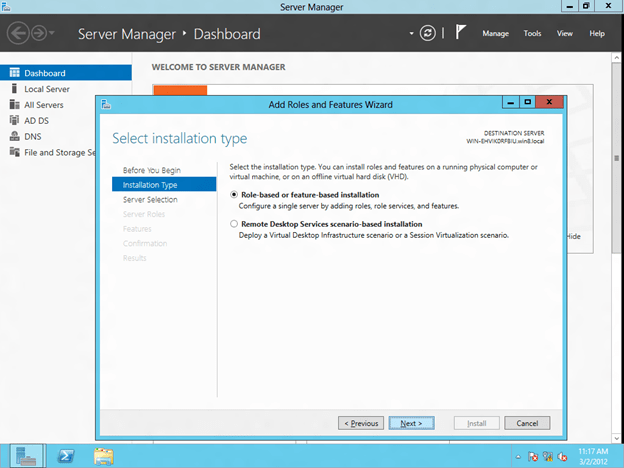

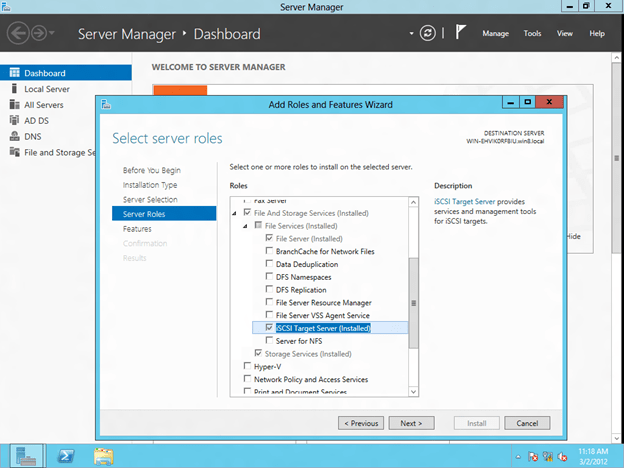

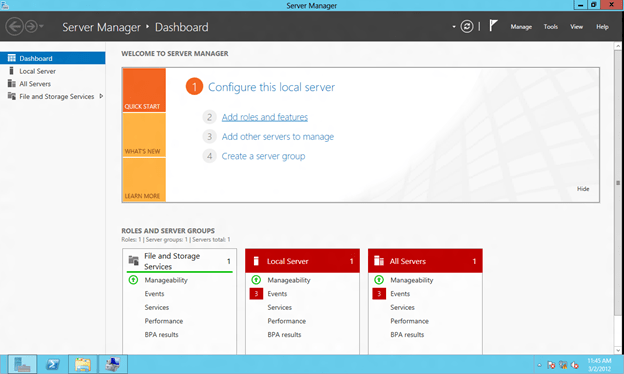

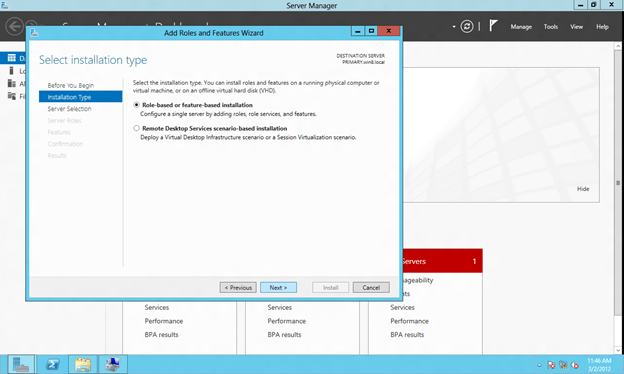

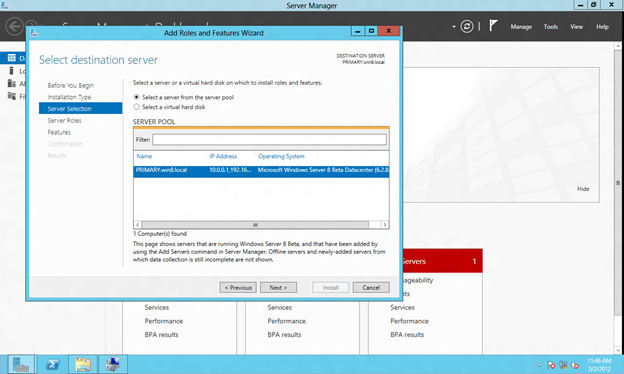

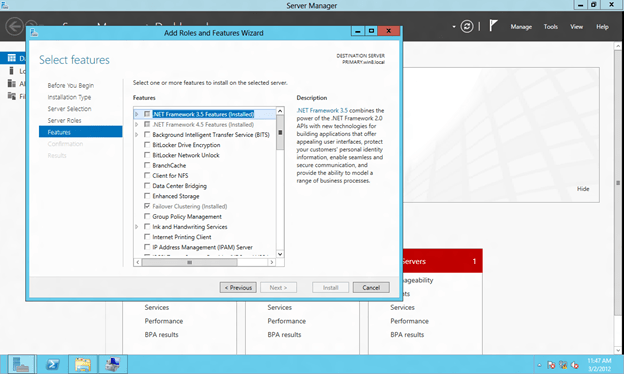

Install the iSCSI Target Role on your Domain Controller

Click Add roles and features to install the iSCSI Target Server role.

You will find iSCSI Target Server listed as a role service under File and Storage Services/File Services. Select iSCSI Target Server and click Next to begin the installation.

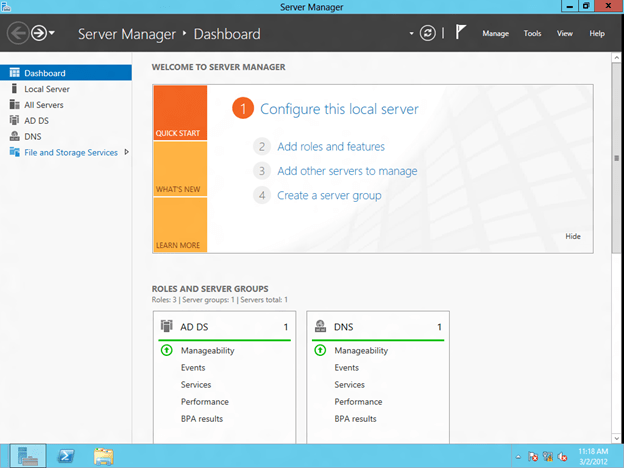

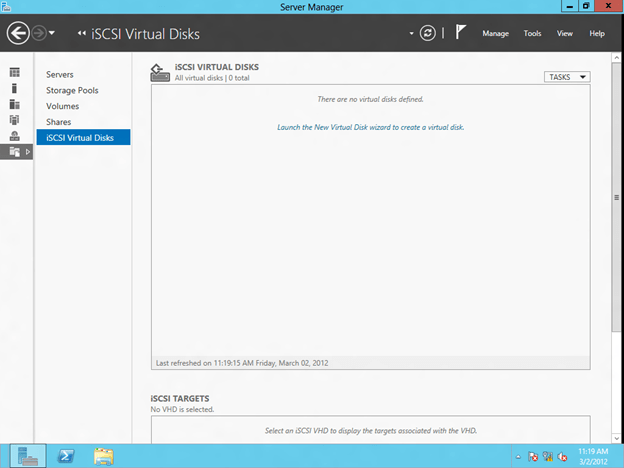

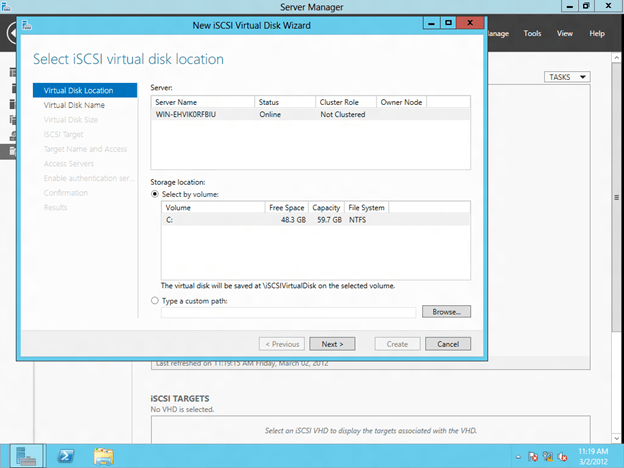

Configure the iSCSI Target

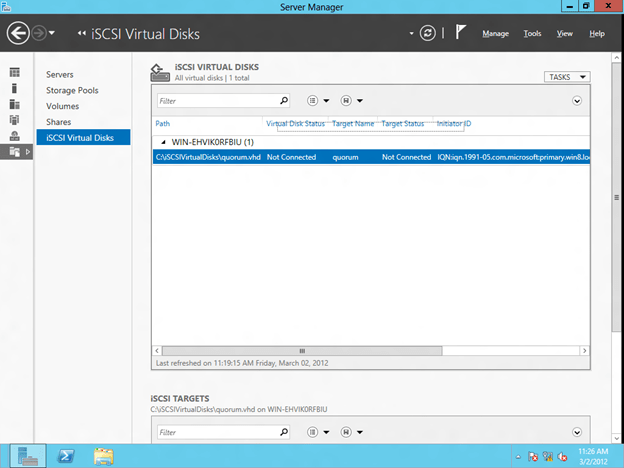

The iSCSI target software is managed under File and Storage Services on the Server Manager Dashboard. Click File and Storage Services to continue.

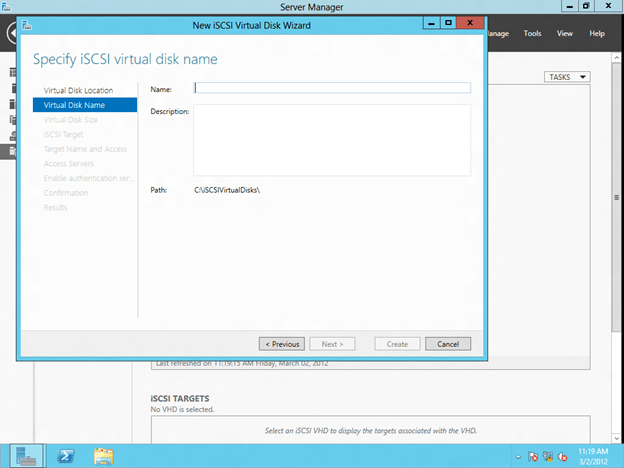

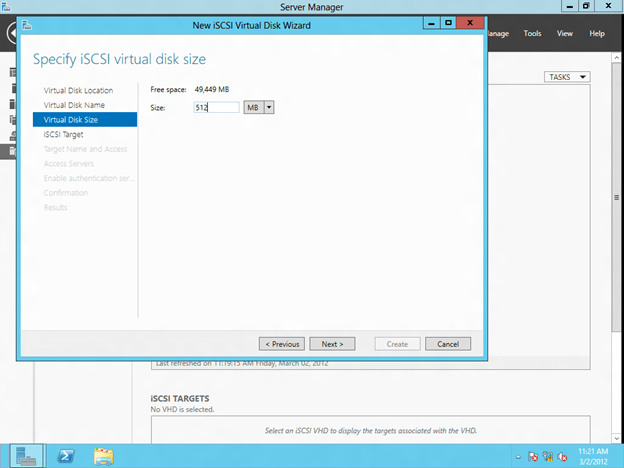

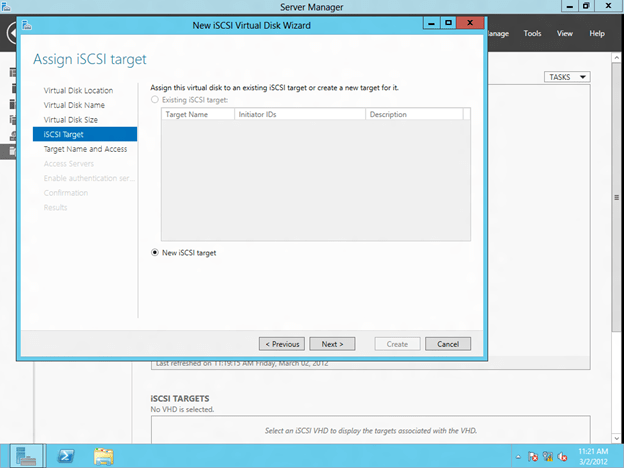

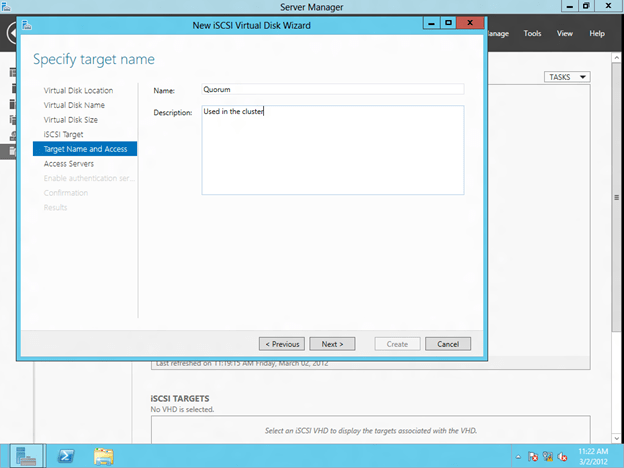

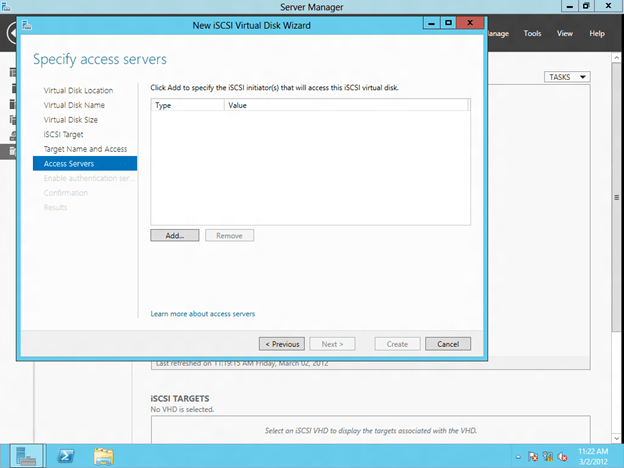

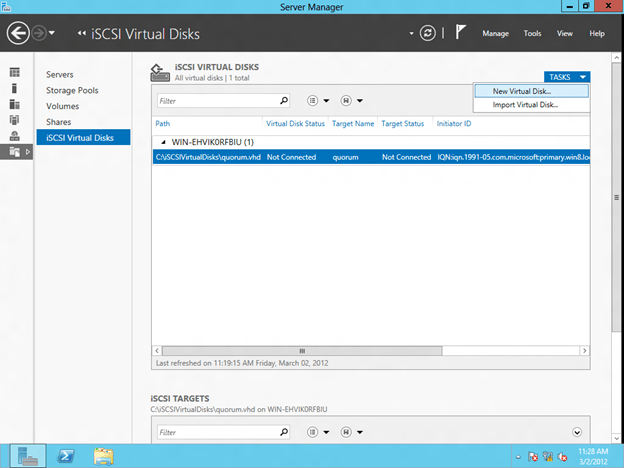

The first step in creating an iSCSI target is to create an iSCSI Virtual Disk. Click on Launch the New Virtual Disk wizard to create a virtual disk.

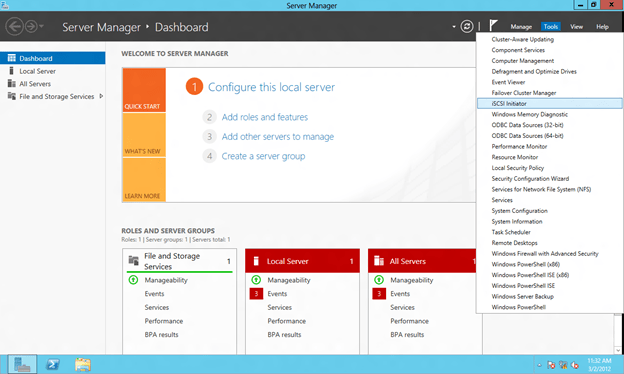

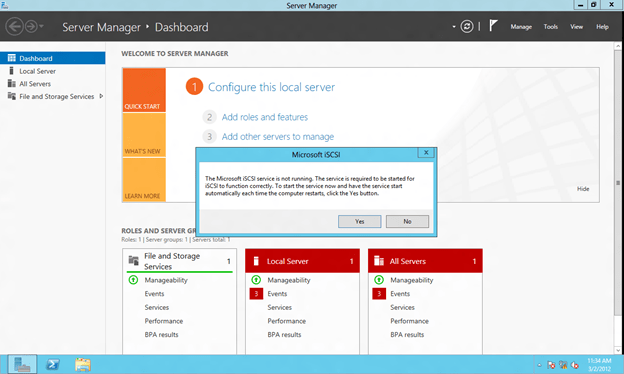

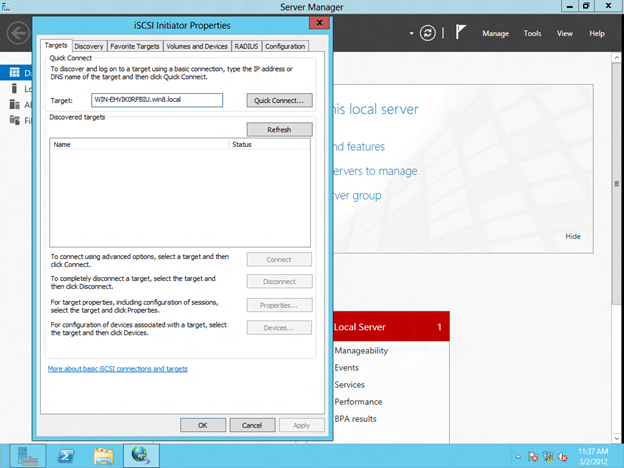

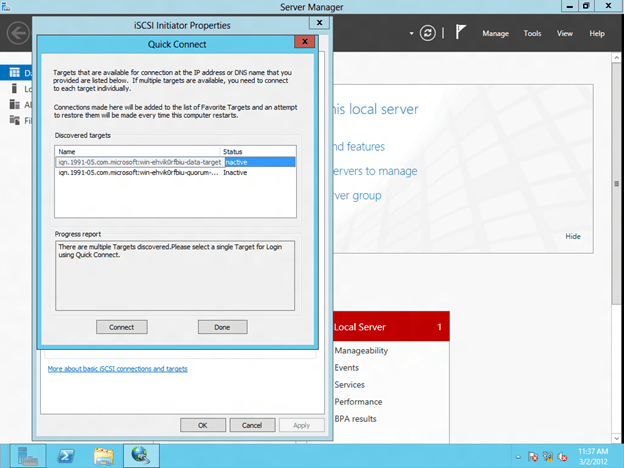

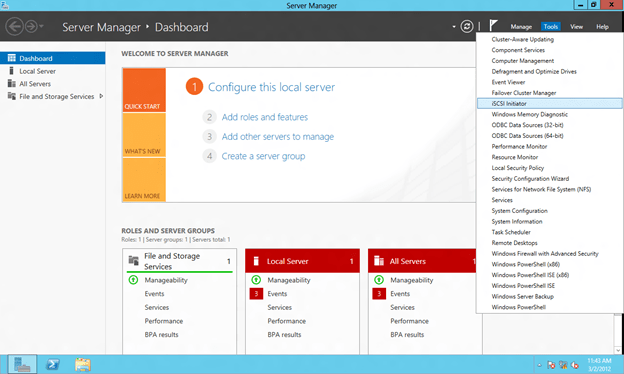

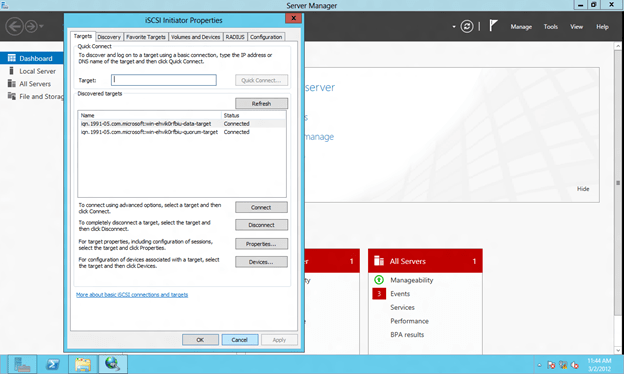

Connect to the iSCSI Target using the iSCSI Initiator

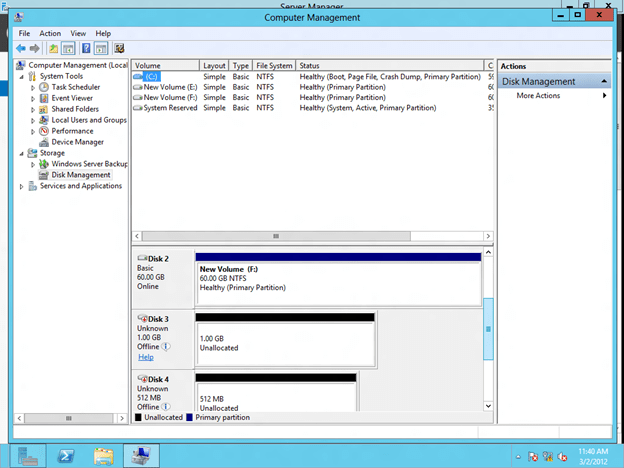

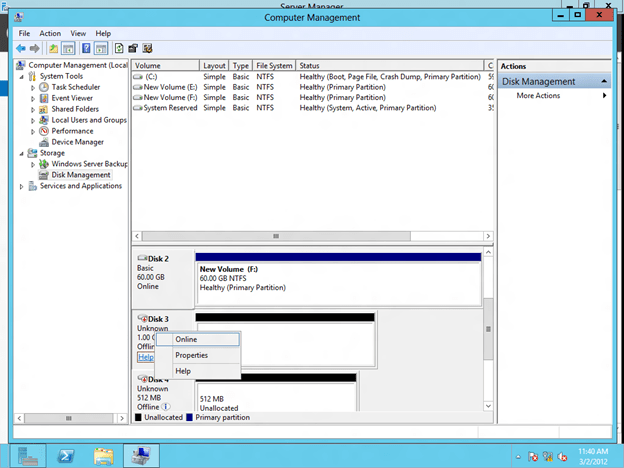

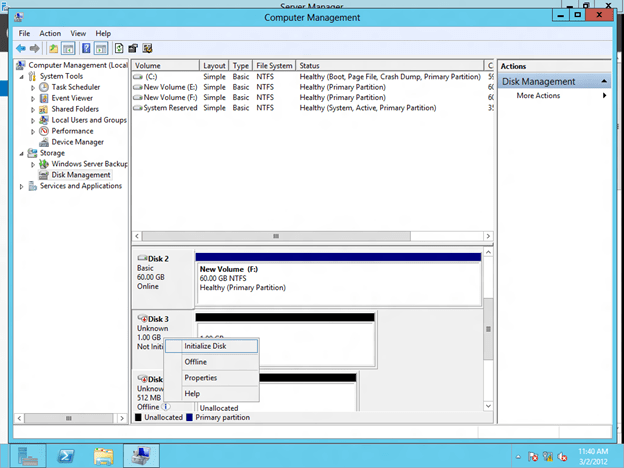

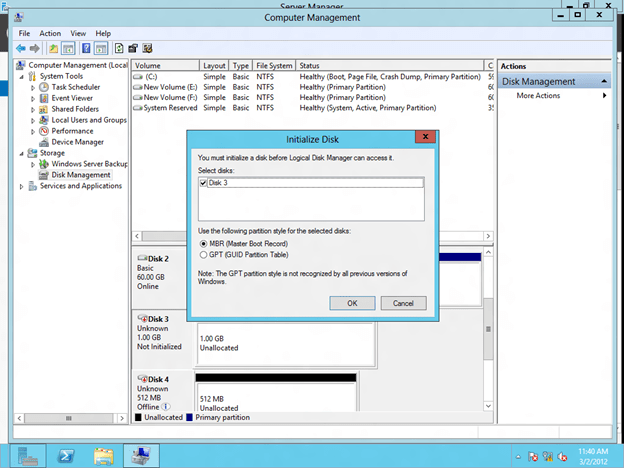

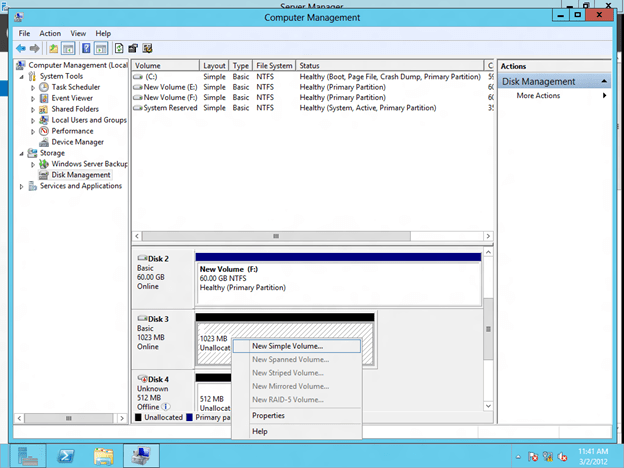

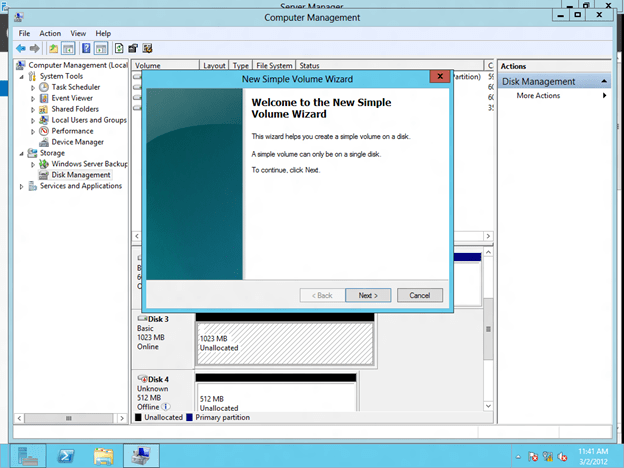

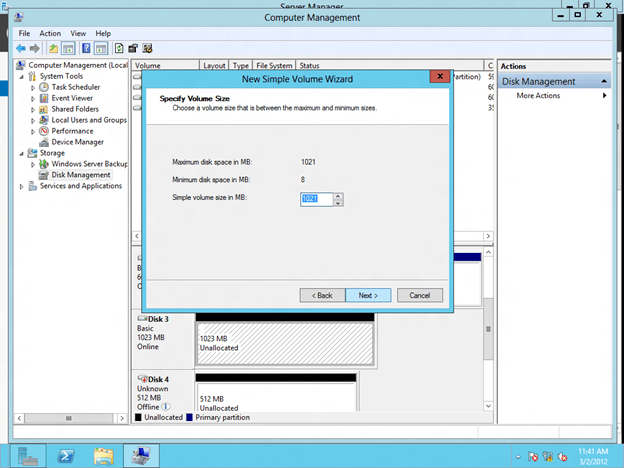

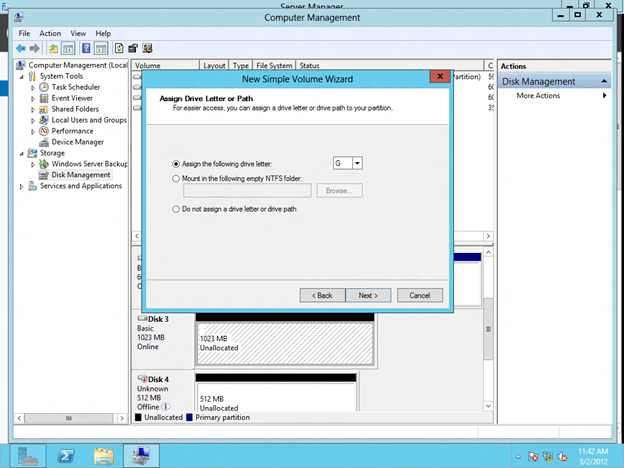

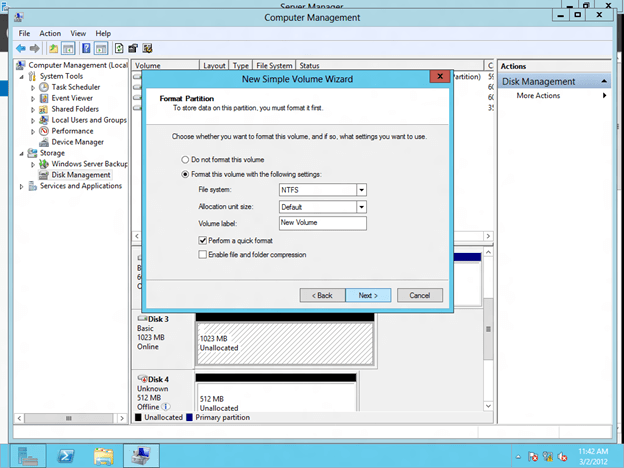

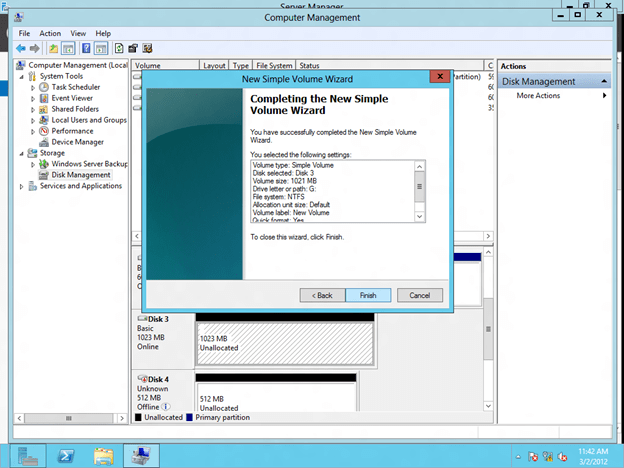

Format the iSCSI Target

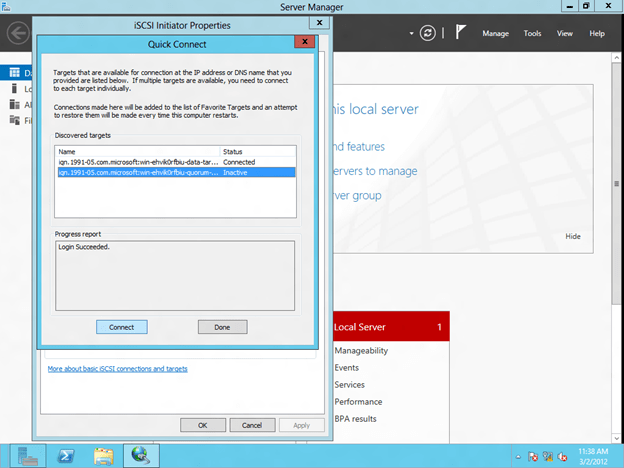

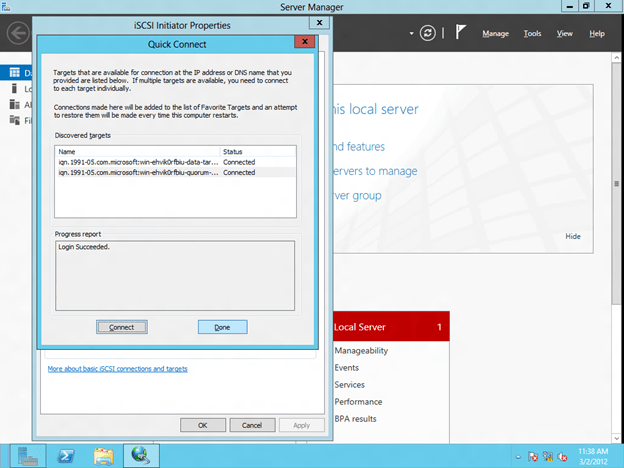

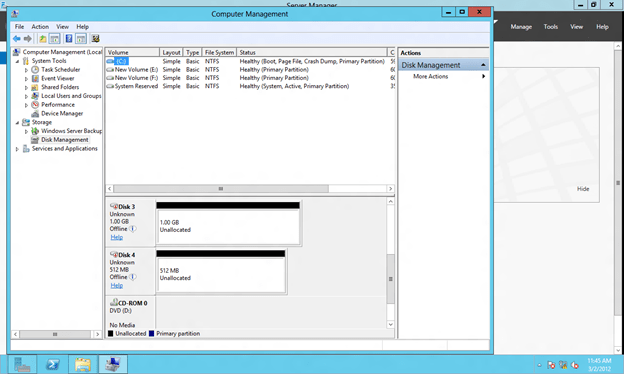

Connect to the shared iSCSI Target from the SECONDARY Server

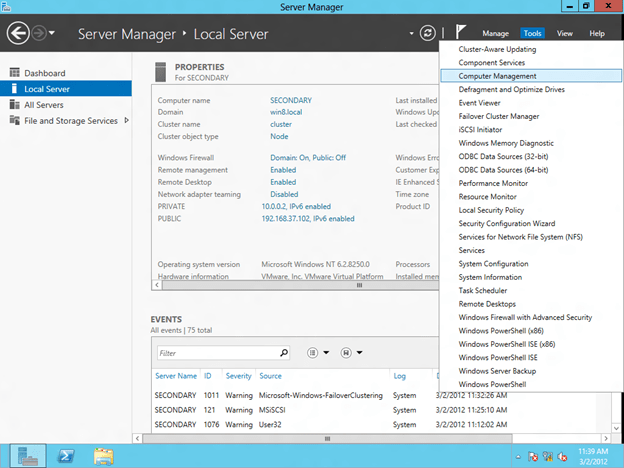

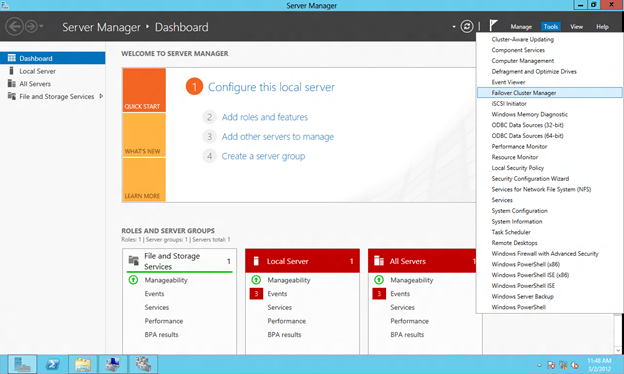

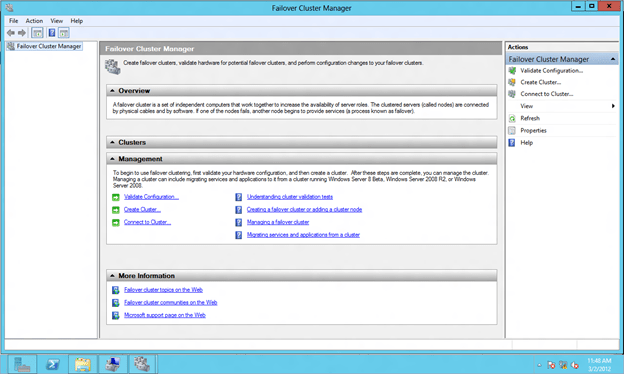

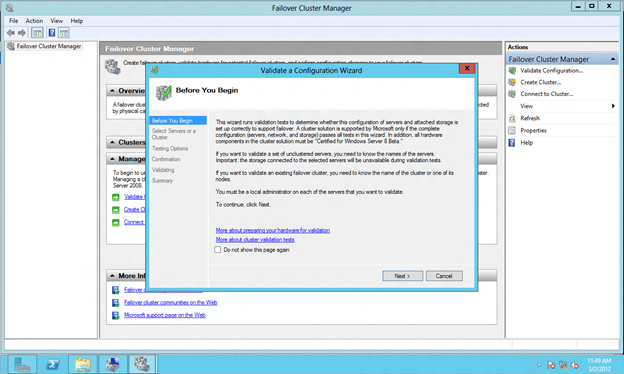

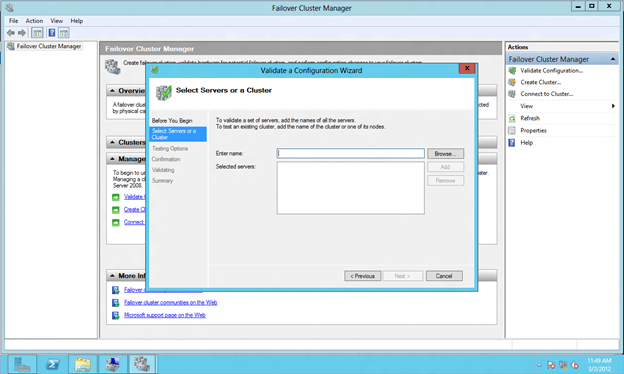

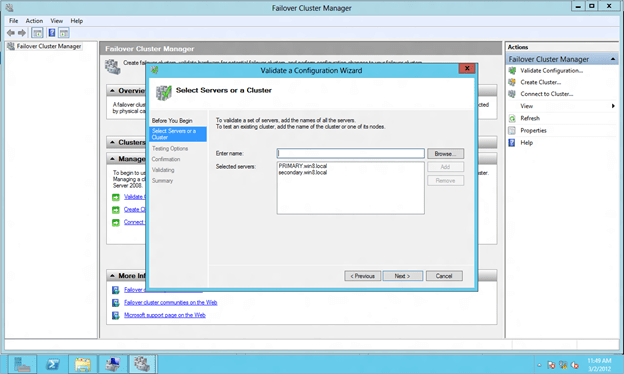

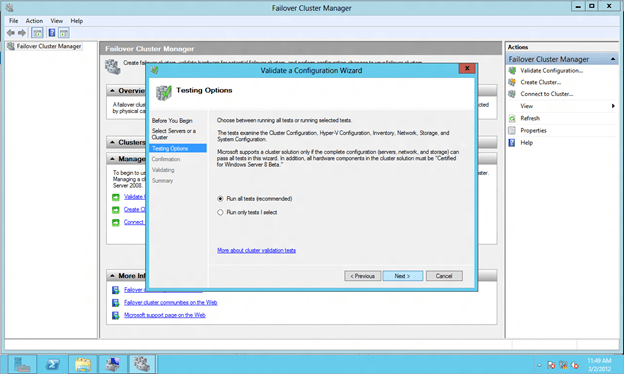

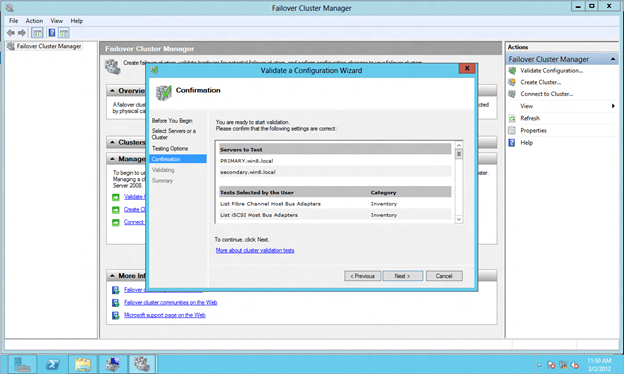

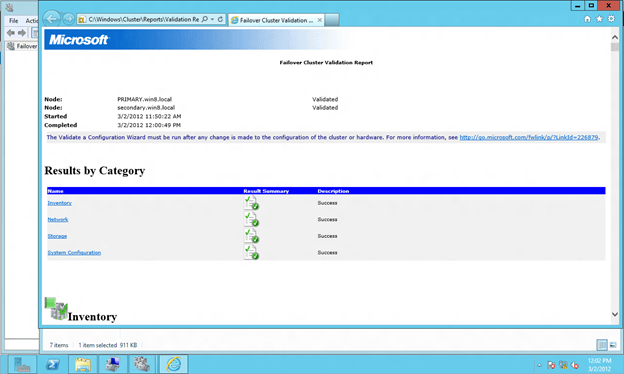

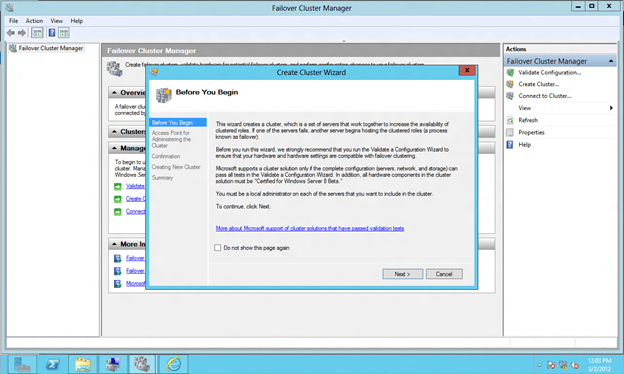

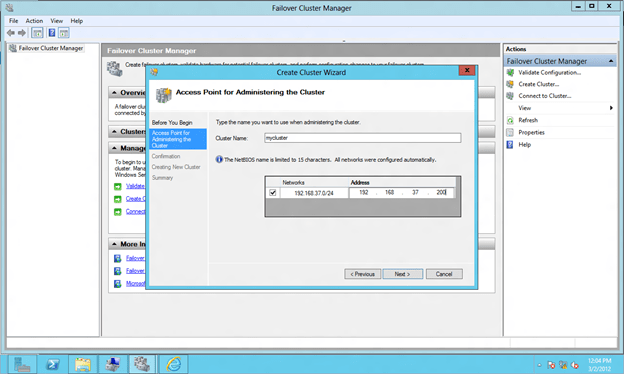

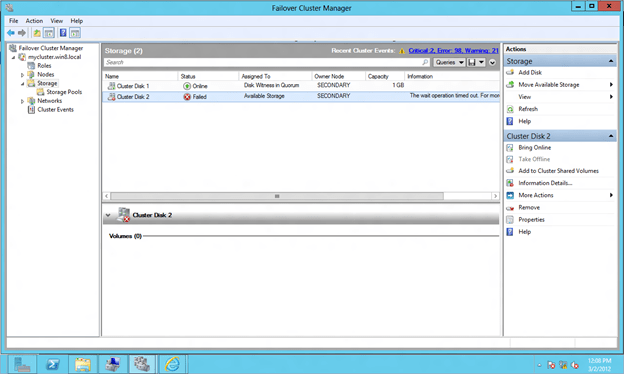

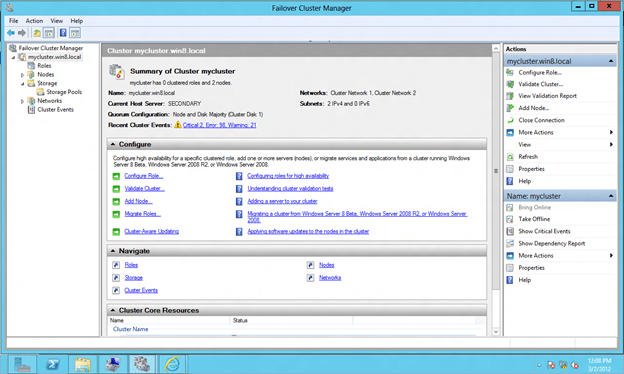

Configure Windows Server 8 Failover Clustering

First off, Awesome Guide!

2nd, I was wondering if you could help me with a problem. When i get to the part in the iSCSI initiator, i get “No targets available for login using Quick Connect” and sometimes “connection failed”. I have all the firewalls disabled…. have any tips?

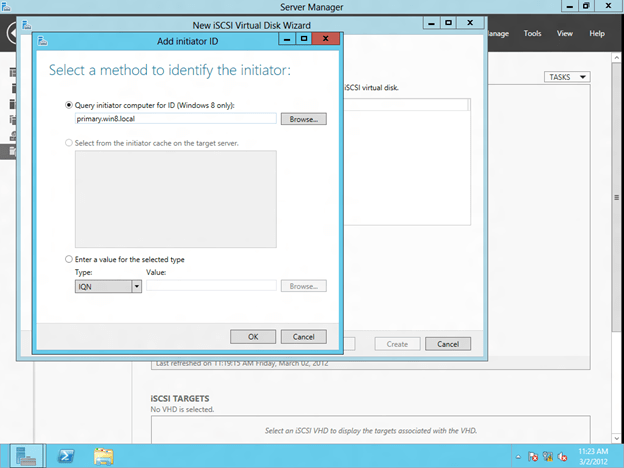

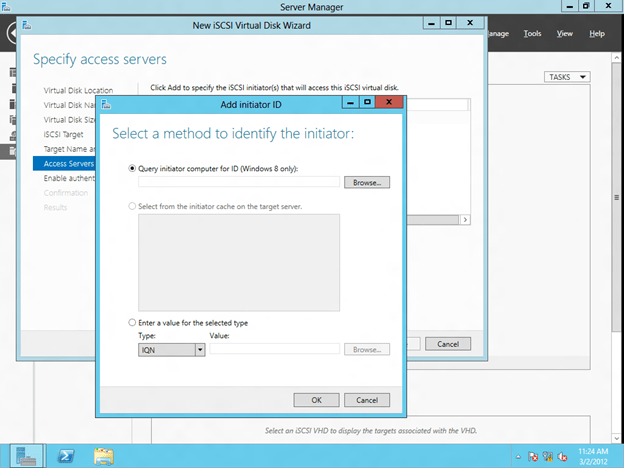

Hmmm… I would assume that either your iSCSI targets are configured improperly or there is some basic IP connectivity problem. Try recreating the iSCSI targets, and this time, at the “Select the Initiator ID” step, type in the actual IP address of the initiator server instead of querying for its IQN. I have had “issues” using the IQN in earlier versions, but it seems to be working fine for me in the beta.
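Before recreating the targets, it can help to rule out the basic IP problem. Below is a minimal Python sketch, meant to be run from the initiator node, that checks whether the target server accepts TCP connections on port 3260 (the default iSCSI port) and whether a string has the basic shape of an `iqn.` name as defined in RFC 3720. The function names are hypothetical helpers, not part of any Windows tool, and the IQN pattern is deliberately simplified.

```python
import re
import socket

ISCSI_PORT = 3260  # default iSCSI target port


def target_port_open(host, port=ISCSI_PORT, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the name and tries each returned address
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # covers DNS failures, refused connections and timeouts
        return False


# Simplified iqn-format check (RFC 3720): iqn.YYYY-MM.reversed.domain[:suffix]
_IQN = re.compile(r"^iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9-]+(\.[a-z0-9-]+)*(:\S+)?$")


def looks_like_iqn(name):
    """Return True if name has the basic shape of an iqn-format iSCSI name."""
    return _IQN.match(name.strip().lower()) is not None
```

In this lab you would call `target_port_open("WIN-EHVIK0RFBIU.win8.local")` from PRIMARY. If it returns `False`, Quick Connect cannot work either, so look at IP, DNS or routing before touching the target configuration. If it returns `True`, the problem is more likely the list of allowed initiators on the target. The Microsoft initiator's name typically looks like `iqn.1991-05.com.microsoft:primary.win8.local`, which `looks_like_iqn` accepts.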

Try that and let me know. If needed, I may be able to do a TeamViewer session to have a look.

Related but unrelated question. First off, thanks for your blog. I’ve hit it numerous times to help with various clustering issues, you definitely deserve the MVP.

Now that that’s out of the way: I’ve built a SQL 2008 R2 cluster on Windows Server 2008 R2. This cluster sits in Amazon’s EC2. I have another server (I’m using the Amazon VPC model) that I need to install Reporting Services on. I am able to ping the individual node name and get a response, but I don’t get a response from the cluster name or IP address. If I try to connect to the individual node, I get the named pipes error indicating that the pipe is not configured for that name. I checked the settings, and named pipes is configured for the cluster name.

So what gives? Why can I access the individual node of the cluster (this cluster only has one node, for now, and a disk quorum) but not the cluster instance itself?

Help. Please. 🙂

Thanks for the kind feedback. So you are saying that you can’t ping any of the cluster IP addresses remotely? Not the management IP? Not the SQL computer name resource? How about locally on the active node: can you ping the cluster computer name and IP address? I would post this in the Microsoft forums as well so you can get other people thinking about it; I don’t have a quick answer at the moment.

http://social.technet.microsoft.com/Forums/en-US/winserverClustering/threads

Replying inline:

1. I can’t ping any of the cluster IPs remotely. By IP or by name.

2. Locally on the active node, I CAN ping the cluster names and IPs.

3. I also just confirmed that other servers on the SAME subnet as the cluster CANNOT ping the cluster names or IPs. (but pinging the node IP or name resolves without issue)

Also, thanks. I’ll throw the question up over there as well.

Hi, awesome blog!

Question: so there is no need to format the volume on the second node in Disk Management? This only needs to be done on the first node?

That is right; in a shared-storage cluster the storage only needs to be formatted once.

I have a concern about this deployment. If the iSCSI target server (here, the domain controller) fails, what happens to the database cluster? And is this setup suitable for a production environment?

If you want to deploy this in production, I would highly recommend clustering your iSCSI Target Server using DataKeeper Cluster Edition to keep the local disk in sync between the two cluster nodes. This eliminates the iSCSI Target Server as a single point of failure.

Hmm, I hear this a lot about the iSCSI target server being a single point of failure. However, I’m not sure I entirely agree: wouldn’t most of us be wise enough to have the iSCSI target server VM sitting on a RAID-10 array?

Of course, the more redundancy you build into your storage, network, servers, power, etc., the less likely the whole system is to fail. But ultimately, if all this hardware sits in the same rack/building/city, that location is still a single point of failure. You just have to decide how much redundancy is enough and make sure you cover all your bases.
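To put rough numbers on that trade-off, here is a minimal Python sketch of the standard series/parallel availability formulas. It assumes component failures are independent, and the availability figures are hypothetical, not measurements from this lab. RAID-10 only makes the disks inside one server redundant; the server itself and the site it sits in remain in series with the cluster.

```python
from math import prod


def series(*availabilities):
    """Every component must be up: A = A1 * A2 * ... (independent failures assumed)."""
    return prod(availabilities)


def parallel(*availabilities):
    """Any one redundant copy is enough: A = 1 - (1 - A1)(1 - A2)..."""
    return 1 - prod(1 - a for a in availabilities)


# Hypothetical figures, not measurements.
SERVER = 0.99  # one iSCSI target server (disks already protected by RAID-10)
SITE = 0.999   # power, network, building: shared by everything in the rack

single_target = series(SERVER, SITE)                         # ≈ 0.989
mirrored_same_site = series(parallel(SERVER, SERVER), SITE)  # ≈ 0.9989

# However many servers you mirror inside one site, the result stays below SITE:
# the shared location is the remaining single point of failure.
```

Mirroring the target server (for example with DataKeeper, as suggested above) removes most of the server-level downtime, but the only way past the `SITE` ceiling is a second, independent location.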