SQL Server 2008 multi-site cluster on Windows Server 2008 R2

In Part 1 of this series, I showed you how to prepare your multi-site cluster, including setting up the Node and File Share Majority Quorum. In Part 2, we saw a video example of how to integrate SteelEye DataKeeper, one of the available cluster replication solutions, with failover clustering to configure a multi-site Hyper-V failover cluster. In Part 3, we will examine how Microsoft SQL Server 2008 can be configured in a multi-site cluster with Windows Server 2008 Failover Clustering and SteelEye DataKeeper Cluster Edition.

First, why would you want to deploy a multi-site SQL server cluster? SQL Server comes with a variety of high availability options, including log shipping, replication (transactional, merge, snapshot), database mirroring and failover clusters. There is a great article that talks about the High Availability options in SQL Server 2008, so I won’t re-invent the wheel here. If you want to learn more about all of the options, have a look at this article by Ross Mistry, High Availability Options in SQL Server 2008.

If you read the article, Ross’ summary makes the following statement…

“Clearly, failover clustering is the best choice to reduce system downtime and provide higher application availability for the whole instance of SQL Server within a site; however, a failover cluster does not protect against disk failure and requires a SAN.”

What if you could have all of the benefits that Ross outlines and also protect against disk failure and eliminate the need for a SAN? Well, the good news is that is exactly what you can do by implementing a Microsoft SQL Server 2008 multi-site cluster on Windows Server 2008. SQL Server 2008 multi-site cluster may be a little bit of a misnomer. It does not necessarily need to be deployed across geographically dispersed locations. The servers could be located in the same rack, same room, same building or across continents; it really doesn’t matter. The important thing is that you are eliminating your storage as a single point of failure and if you choose to locate your cluster nodes in different physical locations then you also have built in site resiliency.

One of the most common questions and points of confusion about SQL Server 2008 and Windows Server 2008 failover clustering is support for failing across subnets. Yes, Windows Server 2008 Failover Clustering does support failing between subnets for most applications, however, SQL Server 2008 is not one of those applications. As far as I know, SQL Server 2008 R2 will also not support failing between subnets when it is released. My understanding is that the SQL team is working on support for cross-subnet failover, but it will be supported sometime after SQL Server 2008 R2 is released. So, for the time being, you will have to span your subnet if you wish to separate your nodes geographically.

Now that you have determined to deploy a multi-node SQL server cluster, here are the steps you will need to follow.

Configure you basic cluster

Follow the steps in Part 1 of this series to configure a basic 2-node cluster with a Node and File Share Majority Quorum.

Configure your replication

Deploying a multi-site cluster will require that you work very closely with your replication vendor during this part of the configuration. Each vendor will have very specific instructions on how to set up the replication and create the storage resources to be used in your cluster. For the purposes of this demonstration, I will use SteelEye DataKeeper Cluster Edition to replicate the E drive from the server named PRIMARY to the server named SECONDARY. The E drive on PRIMARY and SECONDARY are just local attached volumes and not part of any SAN. As long as the E drive shows up as a local attached disk, DataKeeper can replicate it from one server to another. You can mix and match SAS, iSCSI, SAN, VHD, or any other storage type as DataKeeper is storage agnostic.

After you have configured your basic cluster as described in Part 1 of my series, run the DataKeeper setup.exe to install SteelEye DataKeeper Cluster Edition on both nodes of the cluster. You will be prompted to enter your license key at the end of the install. Enter your license and reboot your servers when prompted. You are now ready to create your mirror. Launch the DataKeeper GUI and follow the instructions below to create your mirror.

Click on “Connect to Server”.

Figure 1 – Connect to your source and target servers

Enter the name of the first server. Click Connect.

Enter the name of the second server, click Connect

Now click Create Job. That will launch the Create Job wizard.

Figure 2 – Create your Job

Give your job a name and description. These can be anything you like.

Figure 3 – Give your Job a name and description

Choose the following:

- Source Server – where the data will be replicated from

- Network adapter – the network where the replication traffic will travel

- Volume – the volume you want to replicate

Click Next to continue.

Figure 4 – Choose you source server and network to use for replication

Choose the following:

- Target Server – where the data will be replicated to

- Network adapter – the network where the replication traffic will travel

- Volume – the volume you want to replicate

Click Next to continue.

Figure 5 – Choose your target server

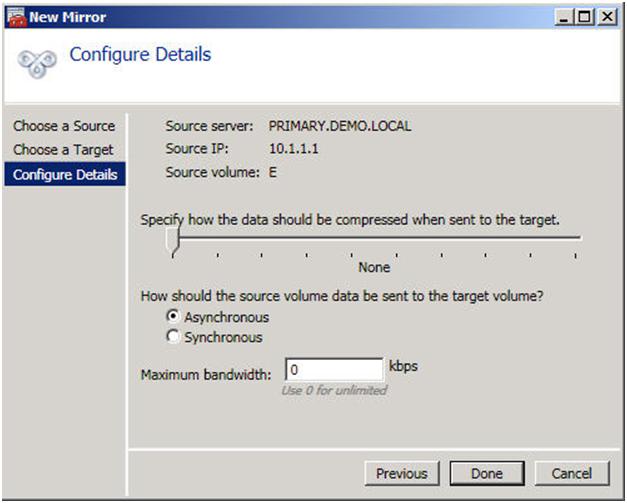

Choose the following:

- Compression Level – If you have a 100 Mbps or faster network for replication, leave it set to none. If you have a WAN that is less that 100 Mbps, you may benefit from enabling compression. Settings somewhere in the middle tend to give you the best performance of compression vs. CPU overhead associated with enabling compression.

- Asynchronous vs. Synchronous – Asynchronous replication is generally acceptable in most environments and is definitely required in high-latency WAN environments. I wrote a whole blog post on this topic, so if you have any questions, check out my article on Asynchronous vs. Synchronous replication.

- Maximum bandwidth – you can think of this as a “poor man’s” QOS. If you want to ensure that replication never exceeds a certain threshold of your WAN capacity, you can put a limiter on the amount of bandwidth it can consume. Unless you have a good reason to set it, it is better off leaving it set to 0.

Click Done to create your mirror.

Figure 6 – Choose your mirror settings

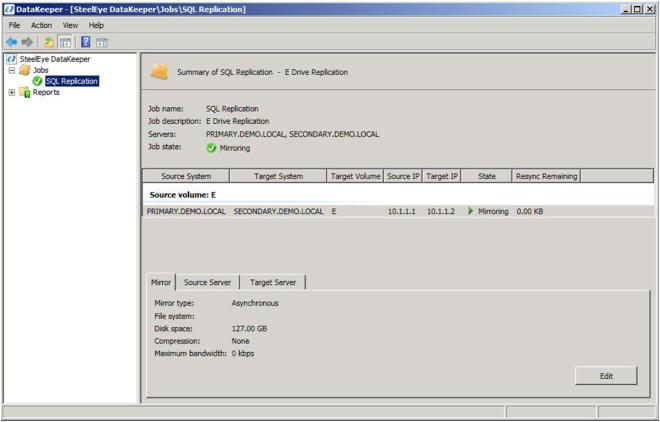

Now if you take a look at your DataKeeper GUI, it will look similar to the following.

Figure 7 – Your mirror is now created

Once you have created your mirror, you need to make your mirror available in the Microsoft Cluster “Available Storage”. There are a few ways to do this, but the most straight forward way is to use the Windows PowerShell CLI. Below is an example that shows how to take the existing mirror we just created on the E drive and add it to the cluster “Available Storage”, move it to the PRIMARY node and bring it in-service

Import-Module FailoverClusters

Add-ClusterResource -Name “DataKeeper Volume E” -ResourceType “DataKeeper Volume” -Group “Available Storage”

Get-ClusterResource “DataKeeper Volume E” | Set-ClusterParameter VolumeLetter E

Move-ClusterGroup “Available Storage” -Node primary

Start-ClusterResource “DataKeeper Volume E”

For more information on PowerShell and the available commands for use with Failover Clustering, check out this great blog post from Symon Perriman of the Microsoft Failover Clustering Team.

http://blogs.msdn.com/clustering/archive/2008/12/20/9243367.aspx

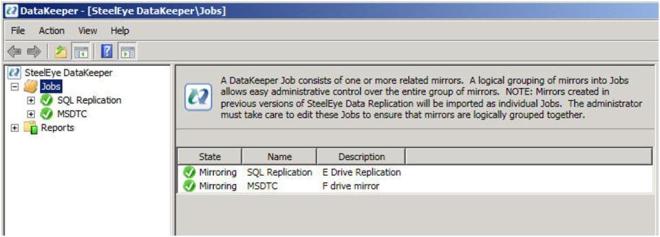

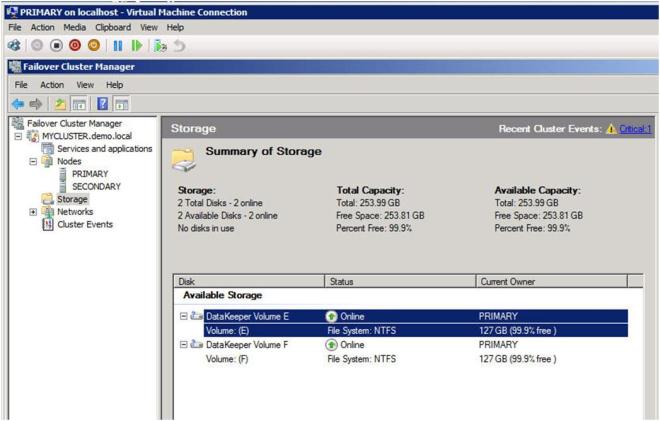

You are now going to repeat the above steps to add any additional mirrors that you will use in your cluster. In our case, we are going to create a mirror of the F drive and use it to cluster the MSDTC. After you have added your additional mirrors and added them to Available Storage, your DataKeeper GUI should look something like this.

Figure 8 – After adding the second Job for the MSDTC resource

And your Failover Cluster Manager GUI should look like this.

Figure 9 – After adding the second DataKeeper resource

Clustering MSDTC

IMPORTANT NOTE – There is a hotfix that is required in order to support DTC with 3rd party disk resources. Please see the following KB article and apply the howfix to all cluster nodes. http://support.microsoft.com/kb/978476

SQL 2008 is very dependent upon MSDTC, so it is highly recommended that you cluster the MSDTC resource before you cluster your SQL resource. The following articles are provided for your reference for configuration and management of your MSDTC resource.

http://technet.microsoft.com/en-us/library/cc770748(WS.10).aspx

http://technet.microsoft.com/en-us/library/cc771540(WS.10).aspx

You will start by opening the Failover Cluster Manager GUI and then choose “Configure a Service or Application”.

Figure 10 – Creating a MSDTC resource

You will then choose “Distributed Transaction Coordinator” and click Next

Figure 11 – Choose your Service or Application

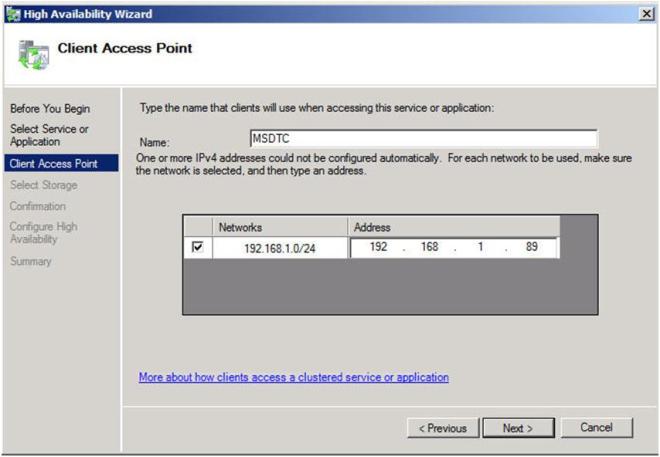

Give the MSDTC resource a name and unique IP address. These should be unique to MSDTC and not the same as you will use later when you create your SQL resource.

Figure 12 – Choose a name and IP address

Choose the volume where you will store the data for the MSDTC resource. In our case we are choosing the replicated F drive.

Figure 13 – Choose a dedicated volume for the MSDTC resource

Confirm your information and click Next to continue.

Figure 14 – Click Next to configure the DTC resource

Congratulations, you have succesfully configured the DTC resource. Click Finish.

Figure 15 – A successfully configured DTC resource

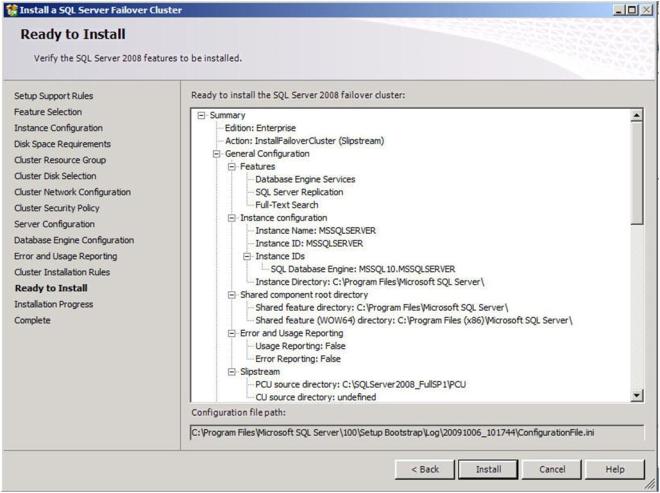

We are just about ready to begin the installation of the first node of the SQL Server Cluster, however, there is one thing we need to do in preparation – Slip Stream SQL 2008 SP1 onto the SQL Server 2008 RTM install media.

Slip stream SQL SP1 onto your SQL 2008 install media

What I have discovered is that SQL Server 2008 will not install on Windows Server 2008 R2 without first slipstreaming SQL Server 2008 SP1 onto your SQL 2008 install media. Here is a great article that describes how to slipstream SQL Server 2008 RTM and Service Pack 1. After I read that article and successfully slipstream SP1 onto SQL 2008 RTM, I found the following Microsoft KB article that describes the same procedure. You may get an error that looks like the following if you try to install SQL without first slipstreaming SP1 onto the media.

There was an error setting private property ‘RequireKerberos’ to value ‘1’

I followed the instructions detailed in the first article and copied my SQL 2008 with SP1 install to the C:\ drive of both nodes in my cluster. In the instructions below, I will do the installation from the local disk of each cluster node.

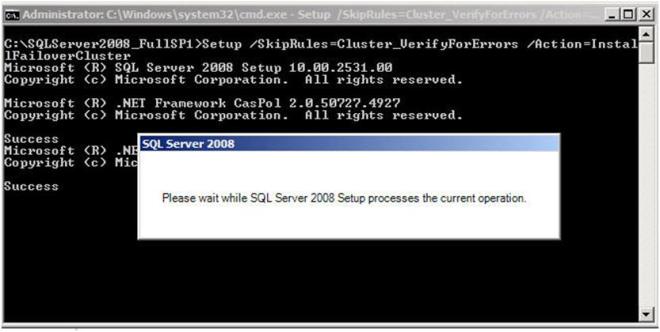

Installing your first cluster node

Now that you have your SQL Server 2008 SP1 installation media ready to go, you are ready to install your first SQL node. There is one major “gotcha” when it comes to installing SQL on a multi-node cluster. In order for you to install SQL on a multi-node cluster, you must first pass the Windows Server 2008 Failover Cluster validate process. Unfortunately, a multi-site cluster is exempt from passing the storage related test, so you never are able to actually “pass” the validation as far as SQL is concerned. It took a little investigation on my part, but what I have come to find is that there is a command line parameter that allows you to skip the validation test on the SQL 2008 installation. Here is the command line.

Setup /SkipRules=Cluster_VerifyForErrors /Action=InstallFailoverCluster

To launch the SQL setup, open a Command window, browse to your SQL 2008 with SP1 install directory and type the command as shown below.

If everything goes as planned, you should see the screen below. Click OK to continue.

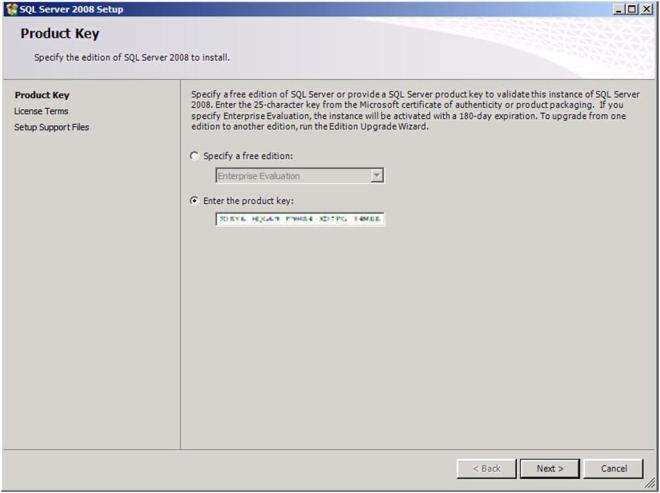

Enter your product key and click Next

Figure 17 – Enter your product key

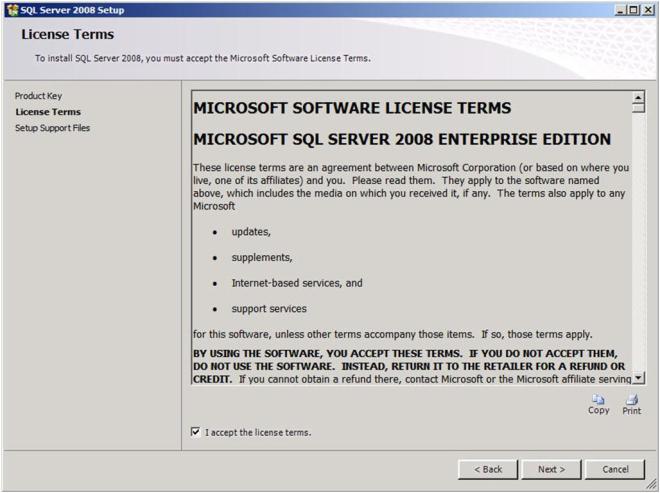

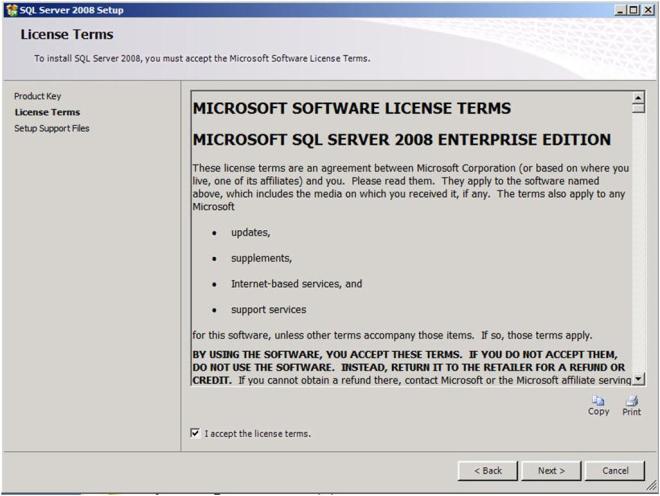

Accept the license agreement and click Next

Click Install to install the Setup Support Files

At the end of the Setup for the Support Files you will receive a warning. Click on Show details and you will see the message below. You can click Next, ignoring this message since it is expected in a multi-site or non-shared storage cluster.

Figure 18 – The warning is expected in a multi-site or non-shared storage cluster

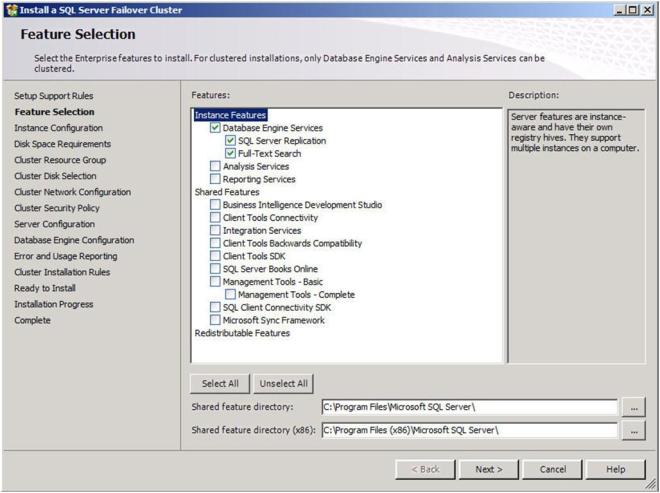

Choose the features you would like to install and click Next. Leave the “Shared Feature” directory set to the C drive as the SQL binaries should not be installed on the replicated volume.

Figure 19 – Choose your features

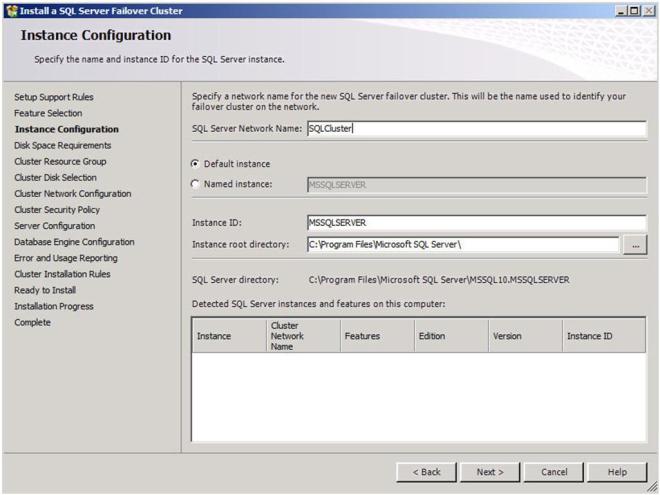

On the next screen, you will choose a network name for your SQL Server. This will be the name that all of the clients will connect to. Assuming this is the default instance, leave the Instance ID and Instance root directory set to the defaults. Do not move the instance root directory to the replicated volume.

Figure 20 – Choose your SQL Network Name

It is recommended that you have separate domain accounts for SQLServer and SQLAgent. You can take the time to create these accounts now if you have not already done so. These accounts require no special permissions, as this install process will give them the permissions that they require.

Confirm you have enough disk space and click Next to continue.

Choose the default settings to allow a new cluster resource group named “SQL Server (MSSQLSERVER)” to be created.

Figure 21 – Allow the wizard to create a new cluster resource group for you

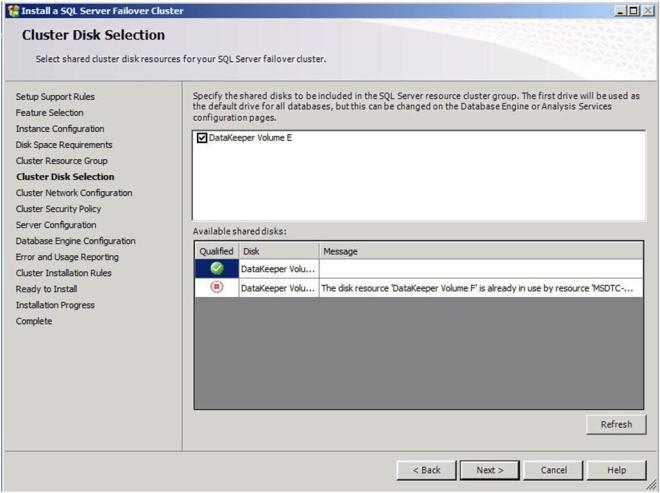

Choose a replicated volume that is still available, in our case the E:\ drive. Click Next to continue.

Figure 22 – Choose your replicated volume

Now you will choose the IP address you want associated with the SQL cluster resource. You could leave it set to use DHCP if you wish.

Figure 23 – Choose your SQL cluster IP address

Choose your security settings and click Next

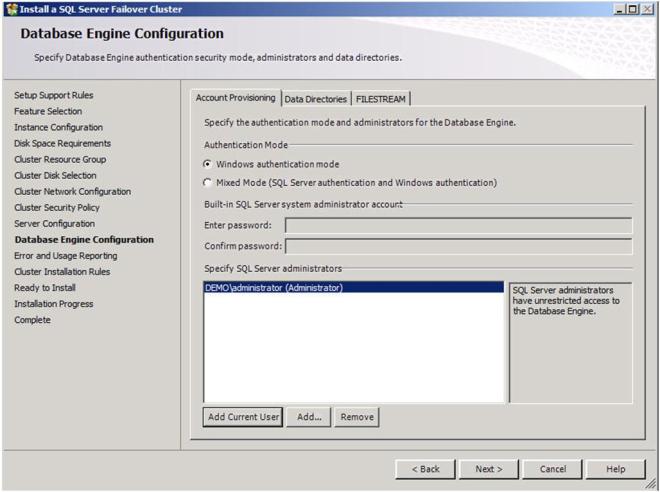

Add any SQL Server administrators and choose your authentication mode and then click Next

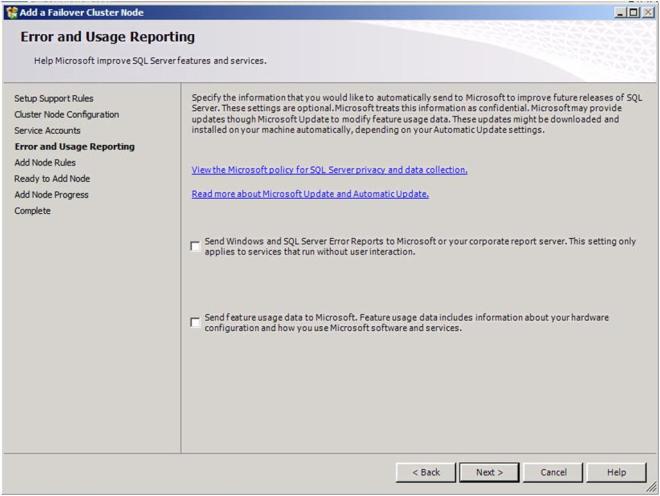

Choose your Error and Usage Reporting options and click Next

You will once again see some warnings related to the validation process. You can ignore those messages as they are to be expected in a multi-site SQL Server cluster.

Click Install to begin the installation process

If everything installs as expected, you should see the following screens. Click Next then Close to finish the installation.

Congratulations, you have successfully installed the first node of your multi-site SQL Server Cluster. Now we will install the second node of the cluster.

Install the second cluster node

Installing the second cluster node is similar to installing the first node. We need to run SQL Setup once again from the command line so that we can skip the Validate process. So, from the 2nd node, open up your command prompt and run the following command.

-

Install SQL 2008 SP1 (merged setup) on the 2nd node using the following command:

Setup /SkipRules=Cluster_VerifyForErrors /Action=AddNode /INSTANCENAME=”MSSQLSERVER”

*Note: This assumes you installed the default instance on the 1st node

That will launch the install wizard as shown below. Click OK to continue.

Enter your product key and click next.

Accept the license terms and click Next

Click install to install the Setup Support files.

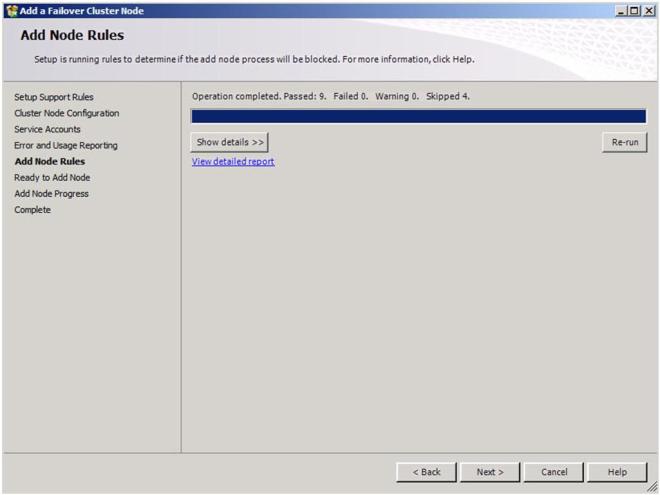

You can once again ignore the warning that some cluster validation tests have been skipped. This is to be expected in a multi-site cluster and non-shared storage clusters.

Verify you are adding the node to the righ instance and click Next.

Add the passwords to the service accounts and click Next

Choose your Error and Usage Reporting options and click Next

Once again, ignore the warning about the skipped operations.

Click Install to begin the installation process.

If everything goes as expected, you should see the following screen.

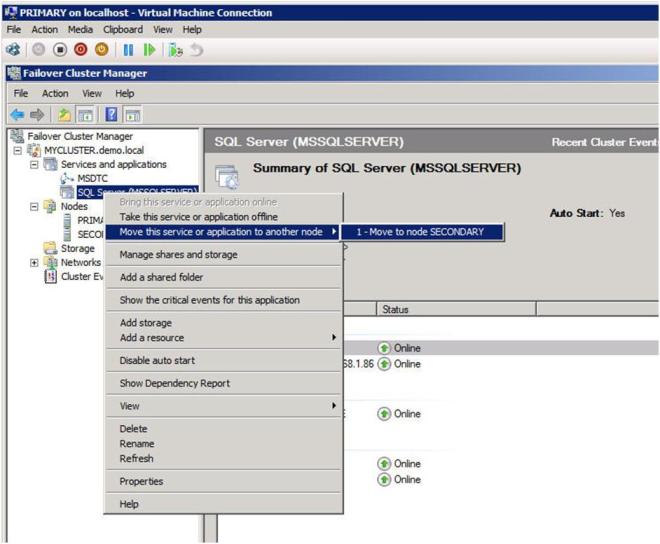

Now that you have a fully functional two node cluster, you probably should testing things out by doing some manual switchovers. Right click on the resource and choose “Move to node SECONDARY”.

If everything is configured properly, your Failover Cluster GUI should look as follows.

Conclusion

I believe that SQL clusters with replicated storage make a lot of sense. Storage has always been a single point of failure of traditional clusters. You can eliminate that single point of failure by deploying a SQL Server cluster with replicated storage from SteelEye or any other Microsoft Multi-Site Cluster replication partner. I hope you found this article informative. If you have any questions or suggestions, please add your comments!

Hi,

I am having Two SQL Server Sites, having one node on each site and using HP XP24000 as storage solution with Cluster Extension, XP24000 is providing the drives on each node (i.e. replicated drives not shared drives), so I omitted the Storage tests while creating Windows Cluster, and installed SQL Server Failover Cluster on Active node by bypassing the Failover Cluster verifications. SQL Server setup completed on Active node successfully.

But the SQL Server setup fails during “disk qulifications check” when I am trying to add the passive node to the SQL Server Cluster, as the SQL Server Setup investigates the disks and dependent resouces on disks. I have also removed the disk dependcies using Windows Failover Cluster Management and all disks are also in same group.

There is only once difference from your steps of adding node is that I add passed following parameter (mistakenly) while adding the node.

Setup /SkipRules=Cluster_VerifyForErrors /Action=InstallFailoverCluster

Can you please suggest me something on this problem?

I think the command line you used should work. So are you failing on the wizard screen “Cluster Disk Selection”? If so, do you see the disks at all in the “Available shared disks:” panel? If not, then you may have done something wrong in terms of setting up the replicated storage. I am not familiar with the Cluster Extension XP, but it looks like page 100-130 of this manual have the instructions you need to add the XP Resource to your cluster. Unfortunately, if you have not already done this and used a basic Volume resource for your first node, I believe you will want to unistall SQL on the first node and start over fresh. I’m sure HP may be able to give you some guidance if you get stuck.

Thank you for your time.

Let me re-write question for better understanding of problem…

we are having following situation:

– Site A is having Node A, having the network drives (HP XP24000 Cluster Extension) which are only accessible by node A

– Site B is having Node A, having the network drives (HP XP24000 Cluster Extension) which are only accessible by node B

– So we are having multi-site cluster, one node at a site with File Share and node majority (File Share on Third site)

– I was able to create the DTC Service, configured the File witness, and installed SQL Server 2008 FOC on Node A

– HP has configured the replication of disks but disks are not visible to both Nodes

While adding the node B into the FOC of node A, SQL Server Setup check’s the “shared disk check” at “Setup Support Rules” step.

– As its mentioned in multi-site cluster documentation, we don’t need to have shared storage then why does SQL Server Setup is checking up the Shared Disks?

– How can I bypass Shared Disks check?

– Is it because I add mistakenly executed the setup with wrong parameter?

Thanks.

Have you read the manual for your storage? I have not used your storage device in a multi-node cluster, but from what I understand it looks like you need to add the “Cluster Extension XP” resource type to WSFC. It is not 100% clear to me how this plays into the configuration of your cluster, but it looks like it may be dependent upon the Volume resource. Your best bet it to get an HP storage specialist on the phone to help you out

From what you told me, I would expect the results you are seeing. If you assign a volume to NODE1 and a different volume to NODE2 then I would expect SQL add-node to fail because the storage used on NODE1 is not available on NODE2. There has to be some way (provided by HP) to trick SQL into thinking the volume on NODE1 is also available on NODE2. SteelEye does this by replacing the Physical Disk resourc with its own cluster resource that looks and feels like a Physical Disk, but is actually a replicated volume resource that controlls I/O fencing and mirror diection. I am not sure how HP does this. If there is anyone out there reading this who knows, please feel free to comment. Or if anyone wants to send me some free HP storage arrays with Cluster Extension XP, I’d be glad to write up a step-by-step guide for multi-site SQL clusters using HP XP storage 😉

Great article and thanks for the reference.

Ross Mistry

Author – Windows Server 2008 R2 Unleashed and SQL Server 2008 Management and Administration

Twitter @RossMistry

Thanks Ross!

[…] glaube ich habe schon gefunden was ich nicht wollte ….: Step-by-Step: Configuring a 2-node multi-site cluster on Windows Server 2008 R2 ? Part 3 Clustering … Ich bin davon ausgegangen, dass ich die Datenbanken mit Boardmittel replizieren kann. Aber um […]

I read some posts here once in a while but this is best one i’ve read so far

Great article. Just what I needed to help plan a proof of concept.

I do however have one question… in the case of a failover to a remote site, how do you fail back? ie. In this example, your data is synchronised from the primary server to the secondary server, but during a fail over when you are working on the secondary server, how do you get your changed data back to the primary server when you are ready to fail back?

Thanks,

Gareth

Gareth,

In the case of DataKeeper as soon as the failed server comes back online it assumes the role of mirror “target” and a partial resync begins automatically. Basically while it is offline the source of the mirror tracks the changed blocks in an intent log, so when the failed server comes back online just the changed blocks are sent. Once all the changes are sent the mirror goes back to a normal mirroring state and you can then bring the original server back online via the Failover Cluster GUI.

With Windows Server 2008 and above, you can configure each of the various settings related to latency of heartbeat responses and number of missed messages before a location is considered unavailable. The key values you want to tune would be:

•SameSubnetDelay—Frequency heartbeats are sent. Default is one second.

•SameSubnetThreshold—Missed heartbeats before an interface is considered down. Default is five heartbeats.

•CrossSubnetDelay—Frequency heartbeats are sent to nodes on dissimilar subnets. Default is one second.

•CrossSubnetThreshold—Missed heartbeats before an interface is considered down to nodes on dissimilar subnets. Default is five heartbeats.

You can configure these with the cluster.exe command line interface and the Set-Cluster PowerShell cmdlet.

Biggest problem usually involves bandwidth. You just want to make sure your bandwidth can handle your rate of change. Second issue is quorum settings. In order to facilitate automatic failover to the remote location you must deploy an even number of cluster nodes and you must locate your file share witness in a 3rd location.

Dave

Very good series. Question, What is the main difference between installing SQL Server 2008 multi-site cluster and installing one instance of SQL 2008 on one VM server within a failover cluster? I have done that and it seems to work very well. When I failover the VM server housing the SQL server there’s no user impact. I’m not a SQL expert so I’d just like to know the significant differnce. Thanks

Jamie,

I blogged about the difference between clustering SQL and clustering at the VM layer here.

This should tell you everything you need to know, if not I’d be glad to answer any questions.

Best regards,

David Bermingham

[…] The busiest day of the year was August 18th with 347 views. The most popular post that day was Step-by-Step: Configuring a 2-node multi-site cluster on Windows Server 2008 R2 – Part 3. […]

[…] https://clusteringformeremortals.com/2009/10/07/step-by-step-configuring-a-2-node-multi-site-cluster-… This entry was written by byronhu, posted on 2011 年 02 月 09 日 at 01:14:52, filed under SQL Server, SQL Server 2008. Bookmark the permalink. Follow any comments here with the RSS feed for this post. 張貼留言或發出引用通知:引用網址。 « 我還可以安穩 10 年嗎? LikeBe the first to like this post. […]

Very good resource!

I am trying to put my head straight with cluster setup.

Questions: I have a production SQL server running four instances, data storage is split in two LUNs (Logs and Data) on a Compellent Dell SAN. OS is Windows Server 2008 R2 sp1, SQL Server 2008 sp1.

Is it possible to install SQL cluster on those instances? I have tried to setup these instances with cluster but it is installing a new instance of SQL…

Can I have two storage resources for same SQL cluster instance?

Reason I am asking is because we have two LUNs, one for Logs and the other one for Databases; and on the setup it allowed to add only one storage resource (SQL cluster process, for windows everything goes fine).

Last question: I have a SQL server located geographically far from the cluster, whitch has been configured with same instances and mirrored databases. Can I have Windows SQL server 2008 mirror setup from the cluster to this server? If the cluster fails over to the other server; would it brake the mirror?

Any help would be much appreciated.

Questions: I have a production SQL server running four instances, data storage is split in two LUNs (Logs and Data) on a Compellent Dell SAN. OS is Windows Server 2008 R2 sp1, SQL Server 2008 sp1.

Is it possible to install SQL cluster on those instances? I have tried to setup these instances with cluster but it is installing a new instance of SQL…

You cannot convert existing instances into clustered instances, you must install new clustered instances and migrate the databases to the new cluster.

Can I have two storage resources for same SQL cluster instance?

Reason I am asking is because we have two LUNs, one for Logs and the other one for Databases; and on the setup it allowed to add only one storage resource (SQL cluster process, for windows everything goes fine).

Yes, you can add a second volume resource after the cluster is created and move the log files to this volume

Last question: I have a SQL server located geographically far from the cluster, whitch has been configured with same instances and mirrored databases. Can I have Windows SQL server 2008 mirror setup from the cluster to this server? If the cluster fails over to the other server; would it brake the mirror?

Yes, this is a common configuration and will work without breaking the mirror.

Any help would be much appreciated.

WOW!

that is a great article.

just wondering, along with windows cluster, can’t we use peer-to-peer replication with sql enterprise to achieve sql replication/mirroring?

thanks

There are certainly a few ways to move data between SQL Servers, including log shipping, database mirroring, transaction replication and peer to peer replication as you mention. Each solution has strengths and weaknesses, so you have to understand exactly what you want to do. A multisite SQL Server cluster as described in this article is going to give you the most complete protection as it replicates the entire SQL instance. Most of the other techniques I mention replicate at the individual database layer.

The other factor you have to consider is client redirection. With a multisite SQL Server cluster client redirection is automatic and supports any application as the SQL instance name is moved between cluster nodes. The other replication methods are not as robust in terms of client redirection and not every application is going to work in these environments. Of these other methods Database Mirroring comes the closest in terms of automatic failover and client redirection, but it does have limitations such as lack of support for SQL instances that use distribute transactions. Even the new SQL Denali HADRON does not support databases that use distributed transactions, although it does offer some pretty significant enhancements and is worth investigating.

Peer-to-Peer replication is not something that I would consider as an option for high-availability as writes are generally limited to one of the nodes. If that node goes away you have to reconfigure your application and your peer-to-peer replication scheme. In general Peer-to-Peer replication requires a lot more thought and planning vs Database Mirroring or Log Shipping, which are much better suited for simple database replication.

This is the most comprehensive article I’ve found yet on this subject. It’s going to be a big help to me as I am just about to implement this very thing! Thanks for saving me at least several hours!

Much appreciated your response; it saved me lots of wondering.

So far I have installed one SQL instance (clustered) with a mirror to another server.

Now I have three instances to setup in the cluster, and I am still on the process of gathering information and best practices prior deploying it to a production environment.

Questions: How large should be each MS Dtc LUN (one for each SQL instance)? Ex: For an instance 500 GB large and hosting SharePoint, CRM and a few others in-house application databases.

Apart of mapping MS Dtc application to a SQL instance, – I used (msdtc -tmMappingSet -name “Mapping1” -service “MSSQL$SQL2008ENT” -clusterResourceName “MSDTC-Cluster2008Dtc”); is there any other tunning I should do in DTC for best performance and further troubleshooting?

Last question: I have a total of 13 LUNs to setup in my environment; I am planning to use four LUNs for each SQL instance and one for Quorum storage. Each instance is divided in DATA, transaction logs, Backup & Log Files and MS Dtc. Is there and recommendation on this configuration?

Appreciate any help,

Thank you,

Wow – it is probably best to post this question to one of the Windows forums if haven’t already done so, you have a few questions there that I don’t know the answers to off the top of my head, but I’m sure that someone in the forums will be able to answer them before I have the time to look them up. Thanks!

Dave, first off – WONDERFUL article! I very much appreciate your sharing the brain dump.

One thing that I thought you may like to know, that will save you an added step and a bit of time,

and that you didn’t really cover, is setting the SQL Server clustered service General and Failover

parameters on the First node, BEFORE your section “Install the second cluster node.”

ON the PRIMARY (First) NODE (after the “Your SQL failover cluster installation is complete” message)

1) Go to the Windows Failover Cluster Manager and modify the SQL cluster service

2) Under “Services and Applications,” Highlight “SQL Server” and Right-click

3) Select “Properties,” and then, on the “General” tab, under Preferred owners,”

4) Put a check-mark in both nodes (ensure PRIMARY node is listed 1st as “preferred owner”)

5) Ensure the service is set to “auto-start”

6) Click “Failover” tab

7) Choose “Allow failback” button, and “Immediately” button – IF that is right for your environment

[WARNING: IF you are using replication across the WAN, tune these as desired,

Example: Leave “Prevent failback” selected, since you likely want to do “manual failback” in the WAN]

This will make install of the second node easier/faster, as it will form a portion of the SQL Cluster,

(Yes, even before SQL is installed on the 2nd node), making the 2nd node aware

of the already-existing “Service Account settings” from the 1st/Primary node.

Saves you some time and effort from having to re-enter the accounts/passwords on the 2nd node.

I stand corrected – the password information doesn’t get passed through, but the accounts are recognized; so it still appears to pass through some of the information, saving the re-entering of the accounts themselves.

Live and learn!

Note also that Windows, even after you set proper NIC binding order, may give you a bogus error in the “SQL Server Setup – Configuration Check Report” – First off, when you first form the actual “Windows cluster,” the message is, indeed, accurate (in most cases) because, in Microsoft’s infinite wisdom, they place your “Hearbeat NIC” FIRST in the NIC binding order, moving your actual cluster/domain NIC to the 2nd position in the network binding order. BUT, even after you correct the binding order (via a HIDDEN “Alt-N” command in Network and Sharing Center, Change Adapater Settings), AND then you fully reboot everything, STILL the “binding order” is reported as incorrect – clearly a Microsoft bug.

“IsDomainNetworkTopOfBindings Checks to see if the computer domain server is on the network that is bound to the top of the network order. Warning The domain network is not the first bound network. This will cause domain operations to run slowly and can cause timeouts that result in failures. Use the Windows network advanced configuration to change the binding order.”

There may be a registry fix or SP for this (have yet to put SQL 2008 R2 SP1 on – will do that in a few moments).

I have seen this same bug on a pretty consistent basis. Thanks for pointing that out!

[…] SQL Server 2008 multi-site cluster on Windows Server 2008 R2 https://clusteringformeremortals.com/2009/10/07/step-by-step-configuring-a-2-node-multi-site-cluster-… […]

[…] SQL Server 2008 multi-site cluster on Windows Server 2008 R2 https://clusteringformeremortals.com/2009/10/07/step-by-step-configuring-a-2-node-multi-site-cluster-… […]

Excellant step-by-step article Dave!! I was able to easily follow thru the steps and get the cluster going. Thanks!!

Hi,

Can you please let me know how is the hardware configuration required if I want to install Cluster (through VMWare or through Starwind Virtual Environment)? How much memory, disk and other configuration parameter take vital role into this?

Regards.

not sure what you mean.

I meant, if I want to install Windows Cluster on a virtual environment using VMWare, how much memory, disk I need to have as a min set of requirement so that I can set up the Cluster.

Does this make sense?

[…] http://blogs.msdn.com/b/clustering/archive/2008/01/18/7151154.aspx SQL Cluster : https://clusteringformeremortals.com/2009/10/07/step-by-step-configuring-a-2-node-multi-site-cluster-… Network Load Balancing Share this:TwitterFacebookLike this:LikeBe the first to like this […]

Hi,

great article..We are planning to implement the same but with 3 node clustering. 1 node as a DR (multi site), but within the same cluster. we are implementing SAN replication. My question is how and where do you tell the sql server node to use mirrored SAN on the DR site..

Thanks in advance,

Phani

In order to use SAN replication your SAN provider must provide some sort of cluster integration. Sometimes this is a helper resource that rides on top of the disk resource or sometimes the disk resource is replaced entirely. Simply because you have SAN replication does not automatically mean you can build a multisite cluster – it must have cluster integration. For example, EMC’s SRDF is a great SAN replication product, however, if you want to build a multisite cluster you will need SRDF CLuster Enabler. Best to speak with your hardware provider. If they don’t have an answer you can always use a host based replication such as DataKeeper CLuster Edition as those types of solutions work with any storage type.

Great article.There are few people who write on different and rare stuffs which are tough to experiment.You are one of them.I had this query if we can set failover cluster across different physical sites from the very first day I had set up SQL Server failover cluster and your explanation had answered all my queries.

Many thanks once again.

Regards,

Zainu

Hello DaveBerm, very good Article.

I was trying to see the limites of such architecture.

The volume réplication using SIOS DataKeeper is a 1 to 1 replication design. Which means that the cluster is limited to 2 nodes if using local drives at each site. am i right?

Second question: imagine i have 2 nodes on one site using shared volumes and another 1 node on the other site with his own volume. How can i setup the replication from shared volume to the single? and above all i wonder how the failover and failback will proceed?

These questions are mainly about scaling out in order to provide high availability from a local site perspective first, then intregrated with Disasster Recovery and still High availability.

Regards

Thanks for the note. This article illustrates a 1 to 1 design, but by no means is that the limits of the application. DataKeeper supports multiple targets, so you could build 1 x 1 x 1 x 1 type designs. It also supports a mixed shared/replicated model, so you can build 2 x 1 or 2 x 2 type models as well. I’ve written about other configurations in some of my other articles. Here is one of those articles:

If you have any questions or opportunities let me know and I’d be glad to get on the phone with you.

Best regards,

Dave Bermingham

Hi,

what happens if i create my own custom resource group name instead of letting SQL create it for me?

I’ve never tried. What is wrong with the default name? If you do it, let me know how it works out for you.

[…] that you say, you don’t have a SAN? Or you’d rather build a solution that eliminates the SAN as a single point of failure and instead allows you to use data replication […]

Thank you for the post. My first Geographically Dispersed SQL Server Instance (GeDiSSIn in short) is up and running. I’m using SteelEye for my supporting database servers (Distributor, Mirror Witness and General DBA Management Instances) as Microsoft does not have a way to mirror the distributor database and/or the mirror witness functionality.

Regards

Christo Pretorius

Does a SQL Server 2008 R2 geo-cluster (multi-site cluster) is possible with Standard Edition?

Yes, a 2-node multisite cluster is possible with SQL Server Standard Edition. Of course, you will have to span your subnet as SQL 2008 R2 does not support cluster nodes in different subnets. And what I just found out is that even with SQL 2012 Standard edition you will still need to span subnets. The cross-subnet failover feature in SQL 2012 is only available in the Enterprise Edition.

Node 1 is a SQL replication publisher doing Merge, Transact, and Snapshot to its subscribers. In the event of failure, Node 2 will become the publisher to those same subscribers.

What SQL files will I need to replicate with SteelEye DataKeeper Cluster?

The MDF, LDF of the all databases, and the distributer DB? Is that it?

All of the system and user defined databases and log files should reside on the replicated volumes, same as a traditional shared storage cluster.

Hello.

These articles are excellent, gave a much clear picture about cluster solution in Win 2008 and SQL. But I’ve question. What happens when you have a metro mirror replication between two sites (different locations) and a file over occurs?.

How the passive node knows that the replicated disk is the primary?

Regards.

Cristian

Excellent Post…! Thanks alot.

But Instead of Data Keeper can I use any alternate which is freely downloadable or any thing offered by Microsoft.

Microsoft does not have a host based replication solution for clustering nor is there anything freely available for download.

Dear Daveberm,

Does two node clustering requires domain

Yes, Microsoft clustering requires a domain. There are 3rd party cluster solutions like SPS for Windows that don’t require AD. http://us.sios.com/wp-content/uploads/2011/06/SteelEye-Protection-Suite-for-Windows.pdf

Hello, in the step where mentioned above “Do not move the instance root directory to the replicated volume.”…is it recommended to leave it as is in the C drive path that it defaults to and to not change (& if yes why?). Thanks in advance.

Standard cluster configuration applies. The binaries reside on local storage, not cluster storage.

am having two site servers but i want a single database to use in case of main server to secondary server connection failure that two nodes working as servers independendly

By hariesh S

This is what a multisite cluster handles. If one site fails the other comes online. Did you have a specific question?

How do you setup a SQL Fenced Cluster and how can it be extended (adding more hosts to the cluster)?

Not sure what you mean by fenced cluster?

Your post save my life.

Tks